Raspberry Pi boosts Pi 5 performance with SDRAM tuning

tl;dr Raspberry Pi engineers tweaked SDRAM timings and other memory settings on the Pi, resulting in a 10-20% speed boost at the default 2.4 GHz clock. Of course, I had to test overclocking too, which got me a 32% speedup at 3.2 GHz! The changes may roll out soon in a firmware update for all Pi 5 and Pi 4 users.

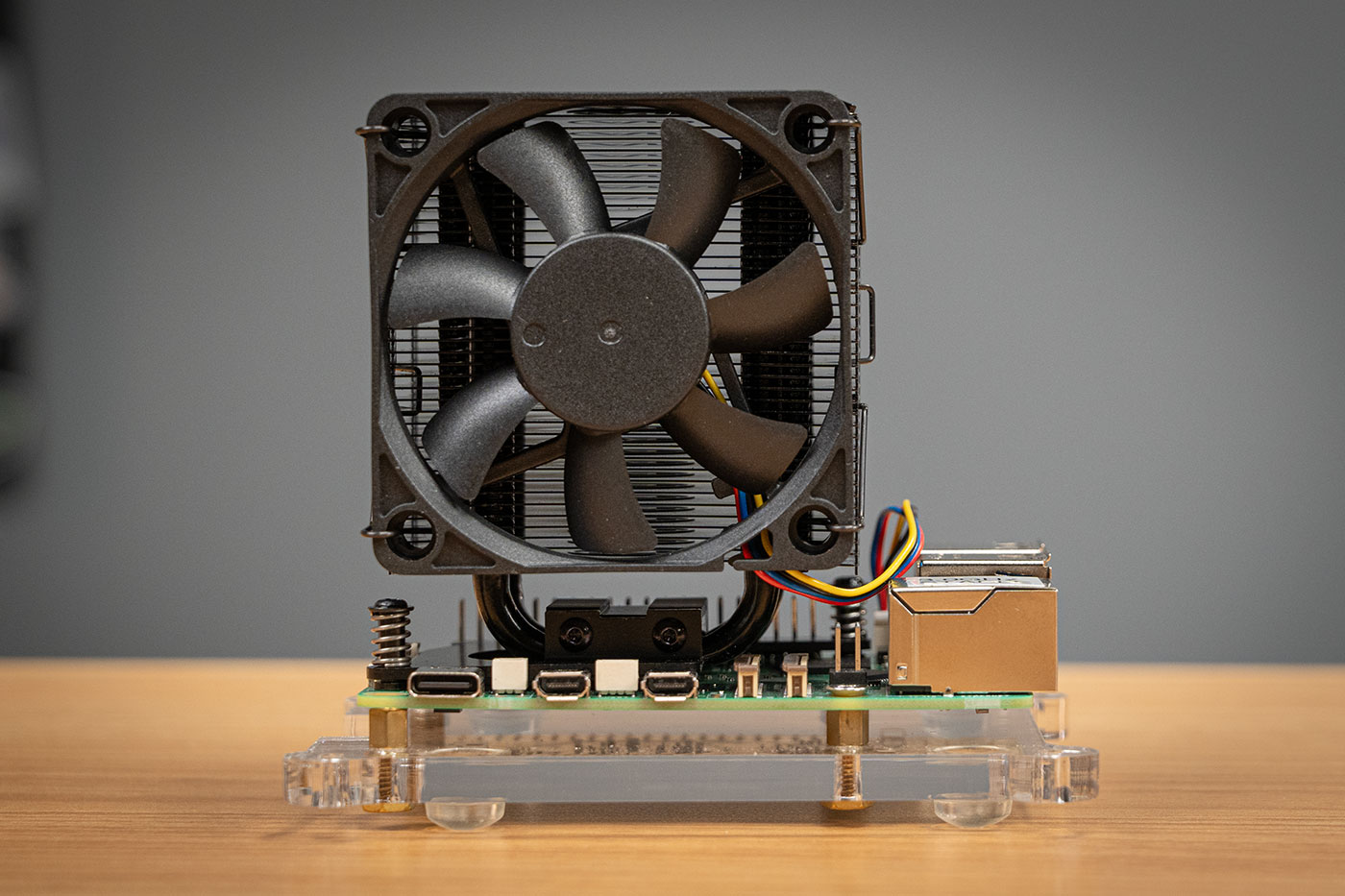

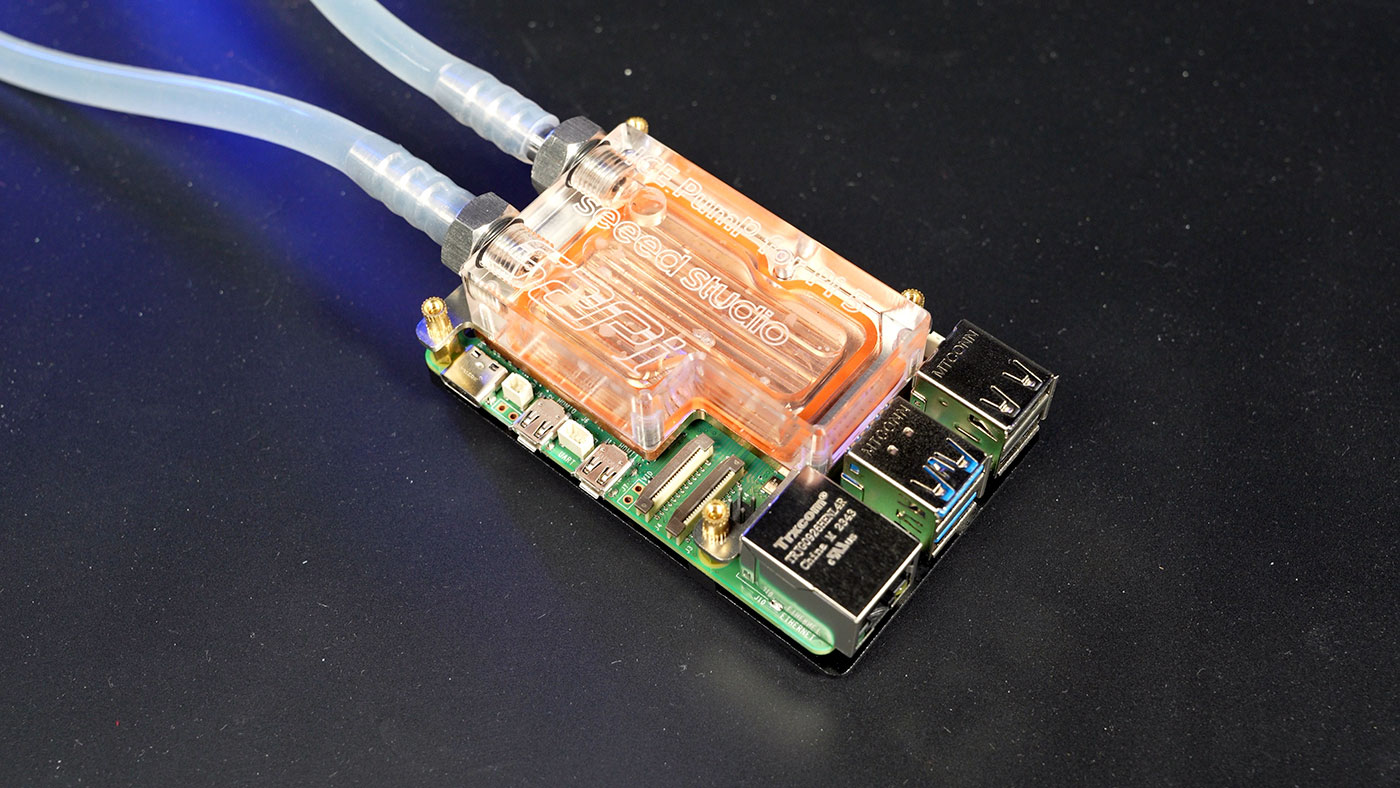

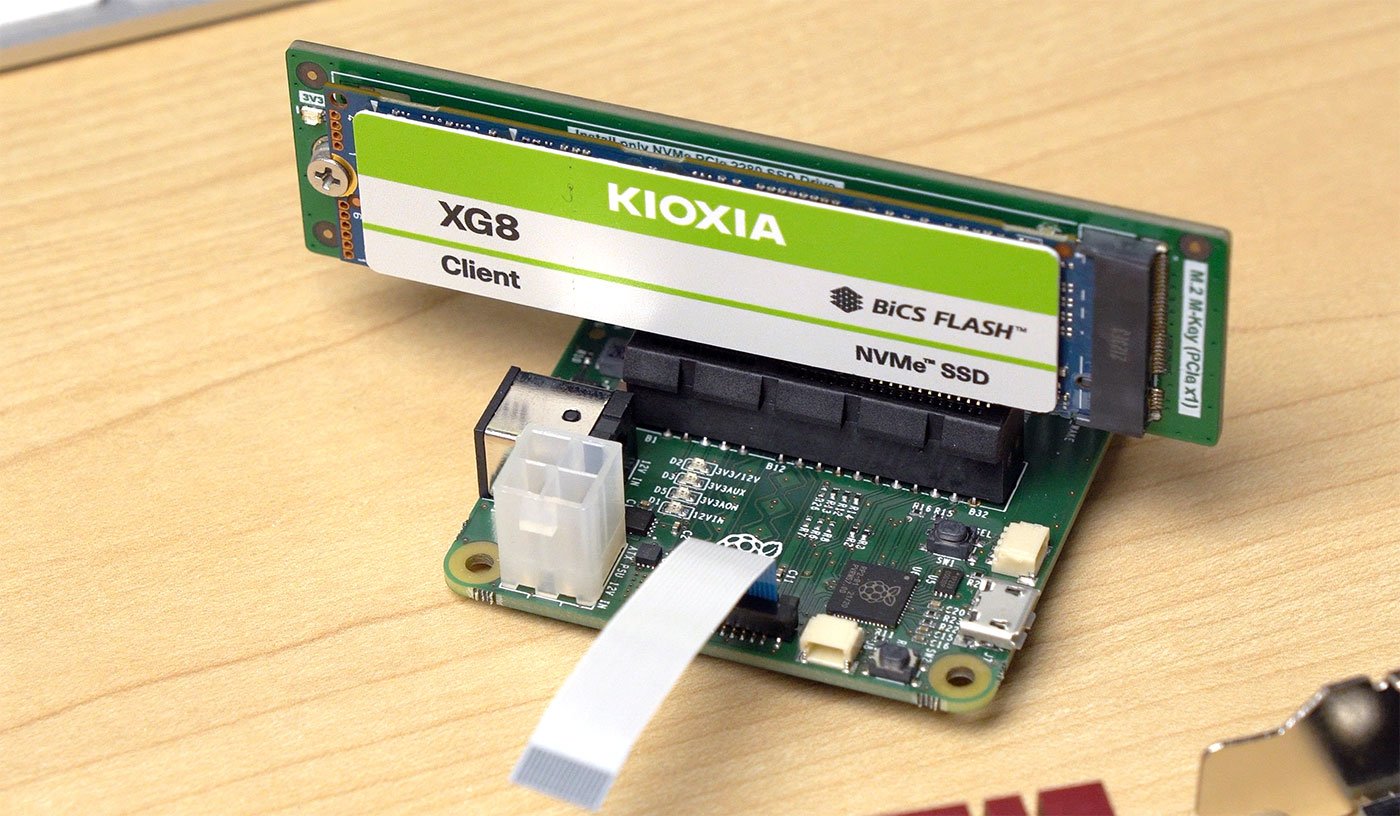

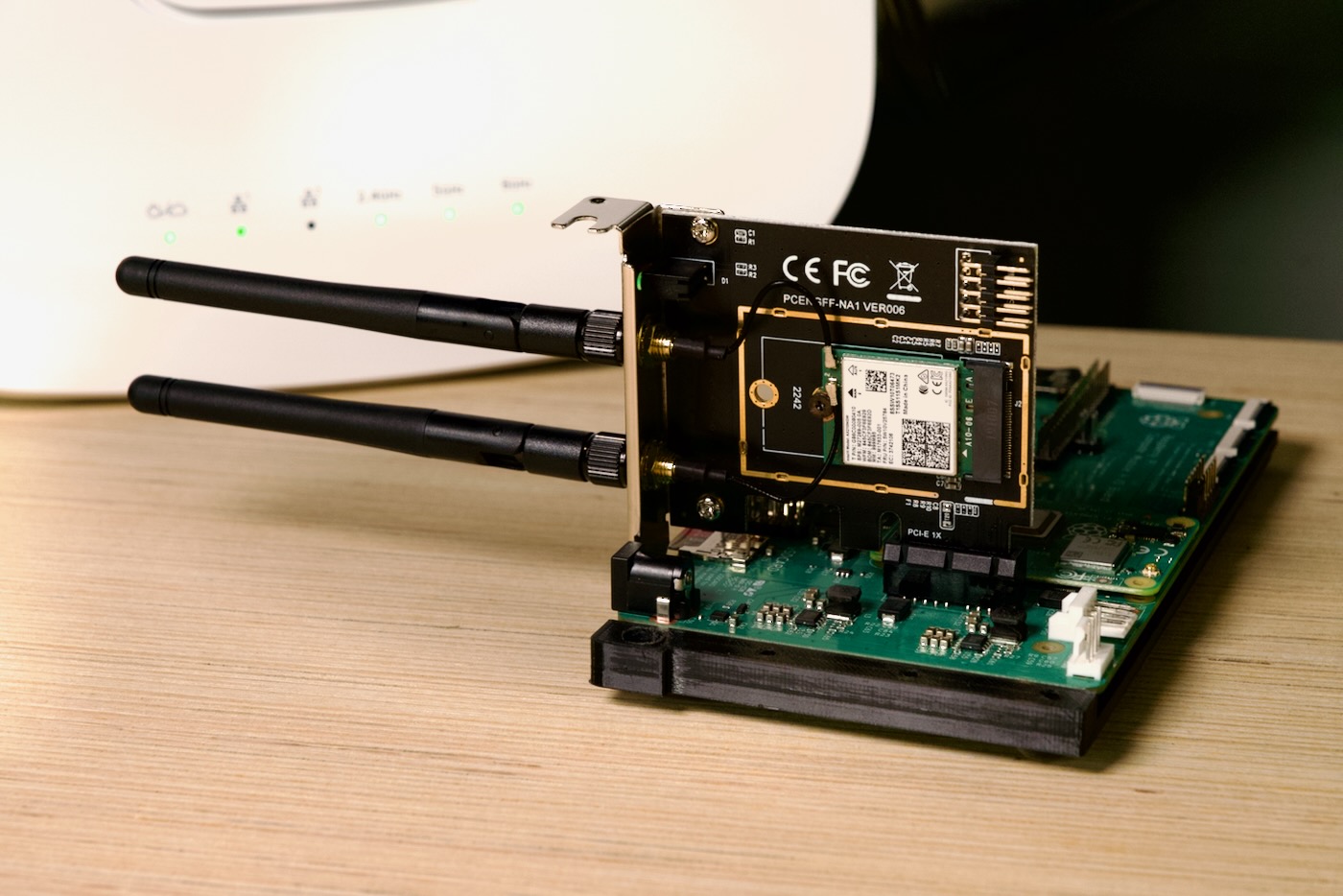

My quest for the world-record Geekbench 6 score on a Pi 5 continues: a couple of months ago, Martin Rowan used cooling and NUMA emulation tricks to beat my then-record score.