Monitoring my ASUS RT-AX86U Router with Prometheus and Grafana

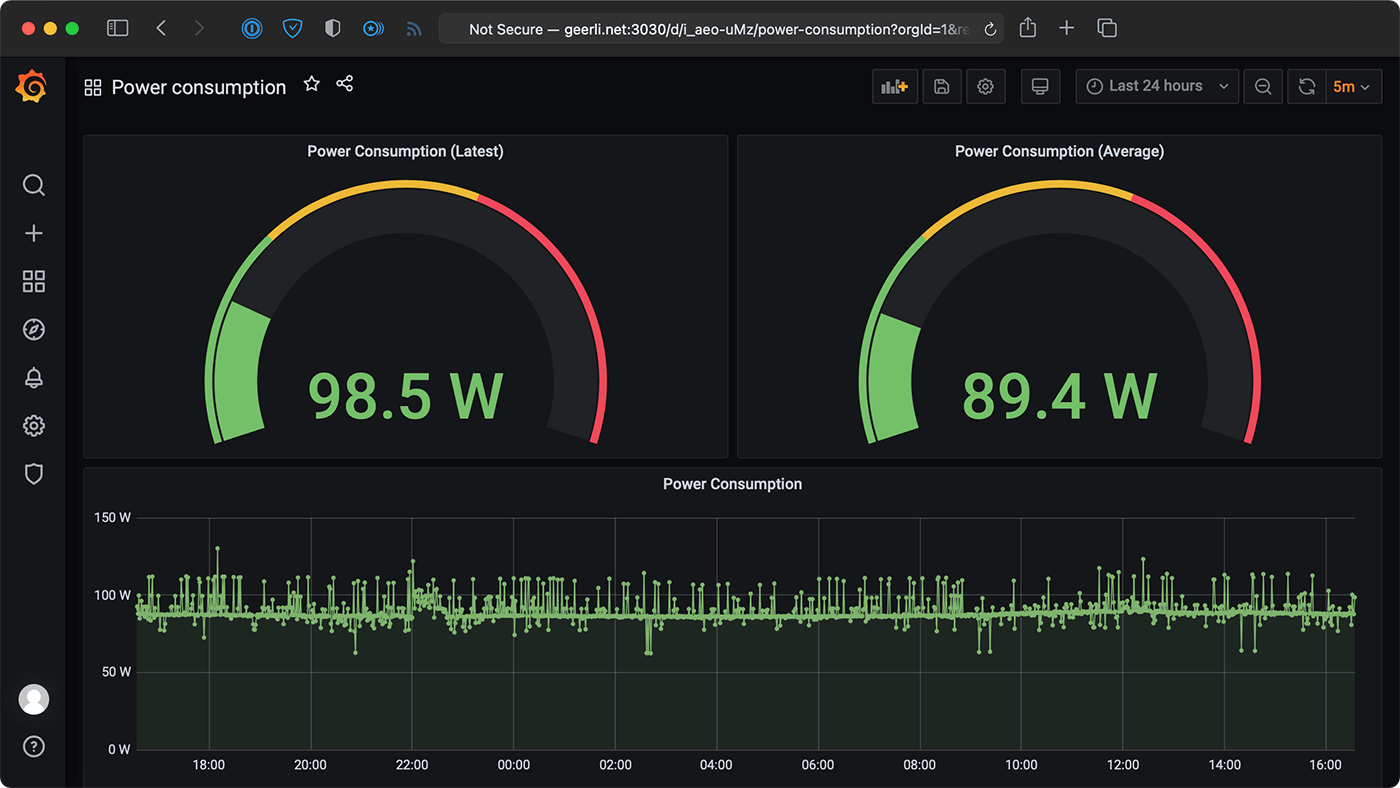

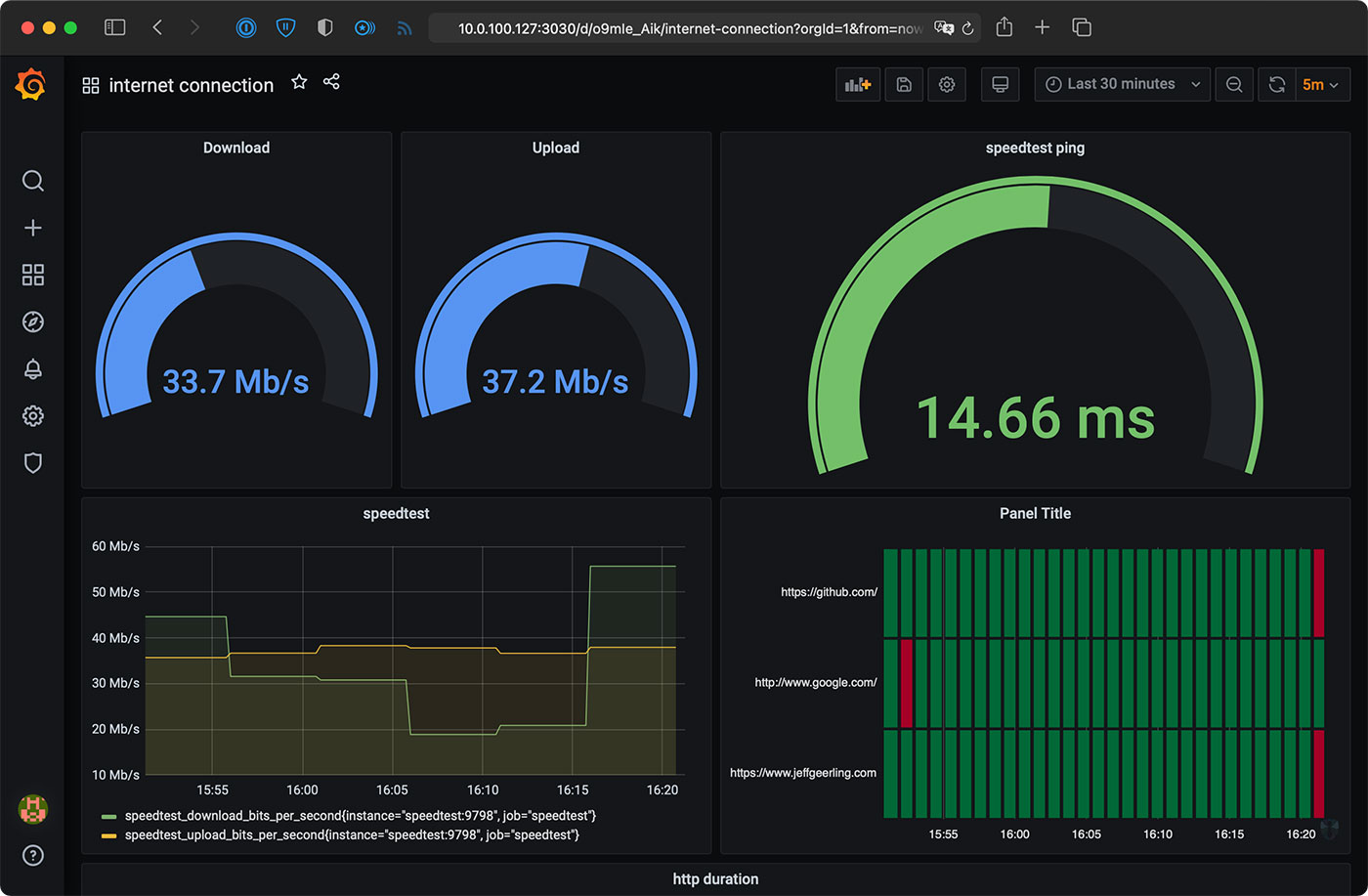

I've been running my Internet Monitoring Pi for a year or so, and it's nice to collect data on Internet performance from inside my network.

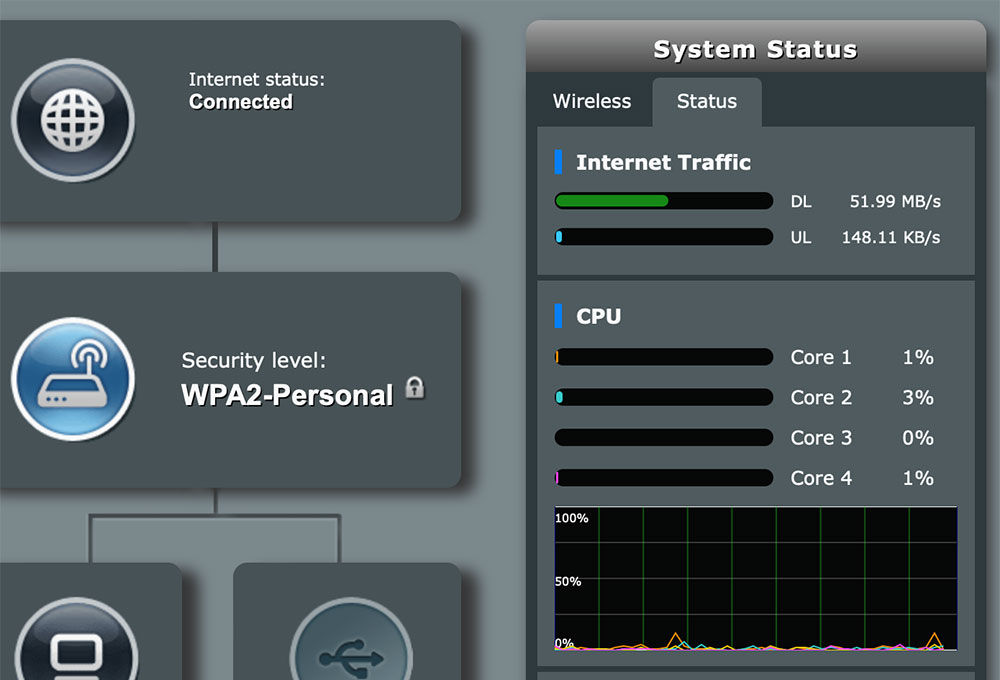

But my router (currently an ASUS RT-AX86U) also tracks its own metrics for inbound and outbound traffic, among other things.

Raw data from the router, which sits at the edge of the network, can sometimes tell a different story than measurements taken behind it. So I want to pull that data into Prometheus.