Building a tiny 6-drive M.2 NAS with the Rock 5 model B

As promised in my video comparing SilverTip Lab's DIY Pocket NAS (express your interest here) to the ASUSTOR Flashstor 12 Pro, this blog post outlines how I built a 6-drive M.2 NAS with the Rock 5 model B.

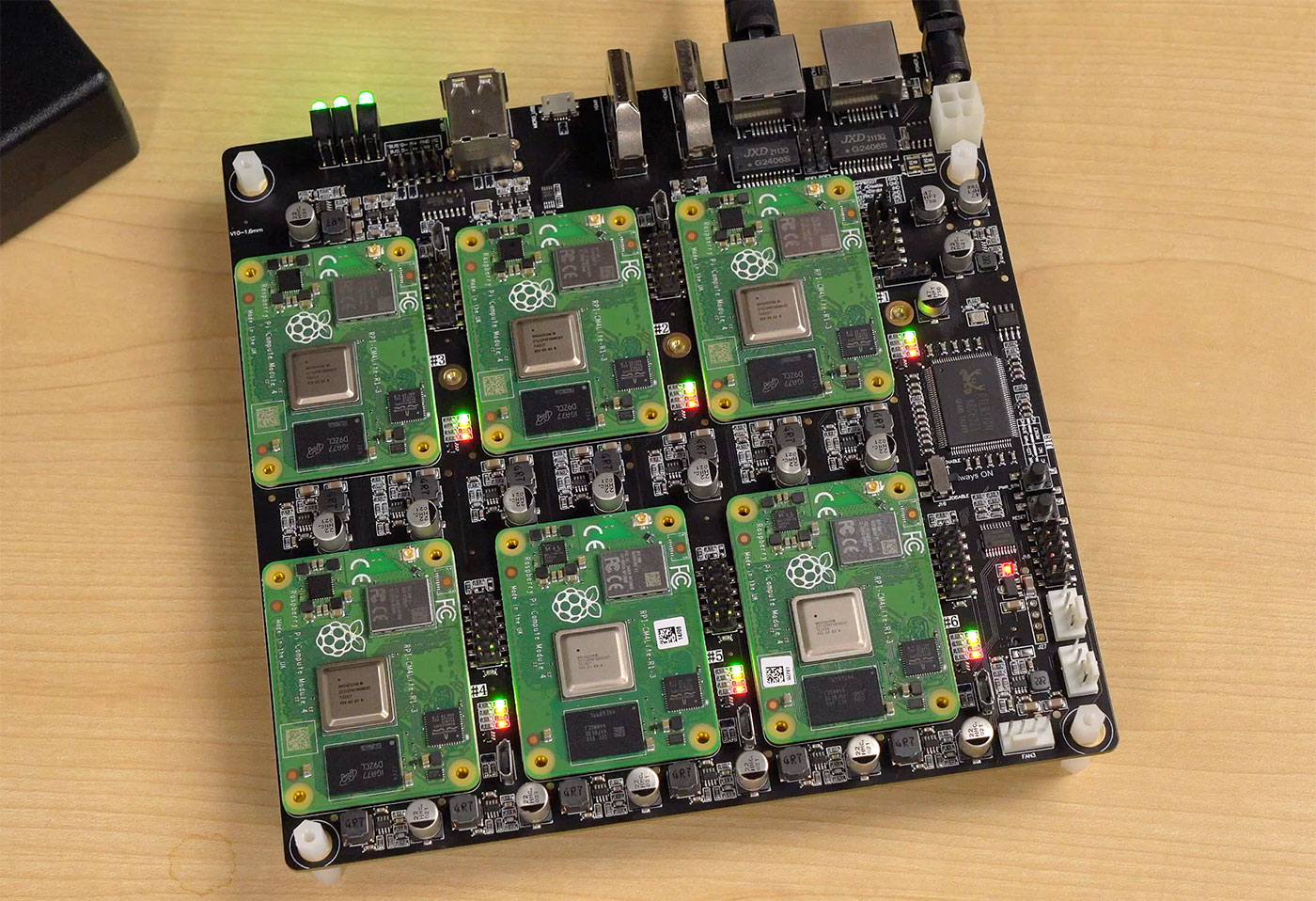

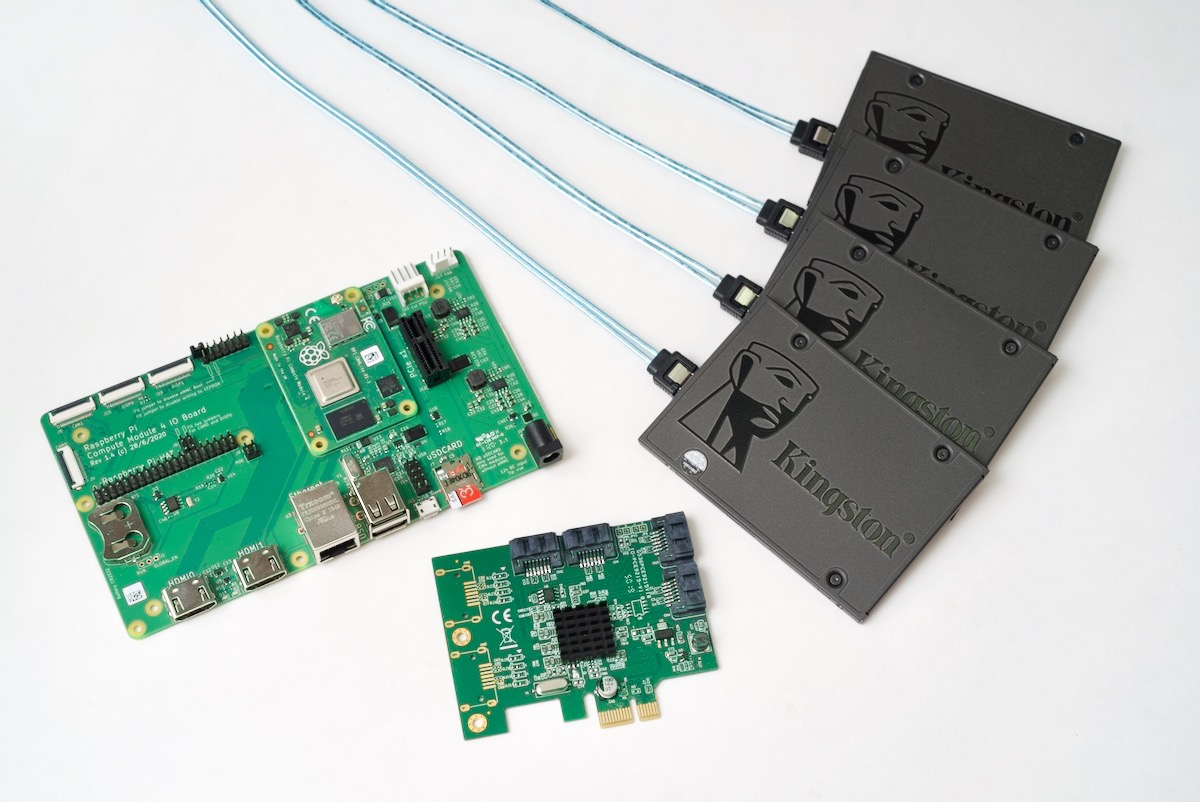

The Rockchip RK3588 SoC on the Rock 5 packs an 8-core CPU (4x Cortex-A76, 4x Cortex-A55, in a 'big.LITTLE' configuration). The SoC also drives a PCIe Gen 3 x4 M.2 slot on the back of the board, which this tiny 6-drive design uses to make a compact, but fast, all-flash NAS: