Pi modder successfully adds M.2 slot to Pi 500

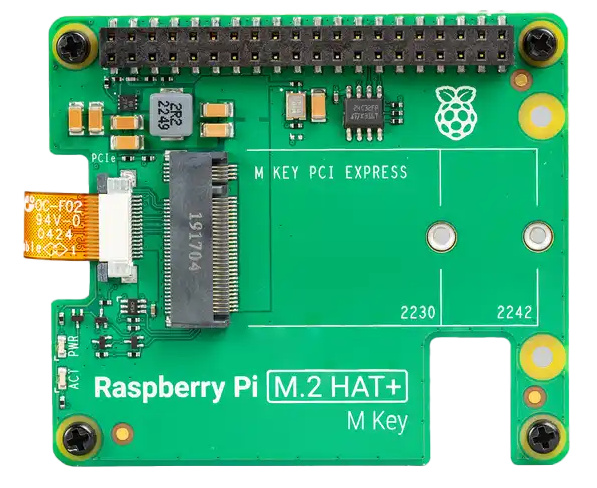

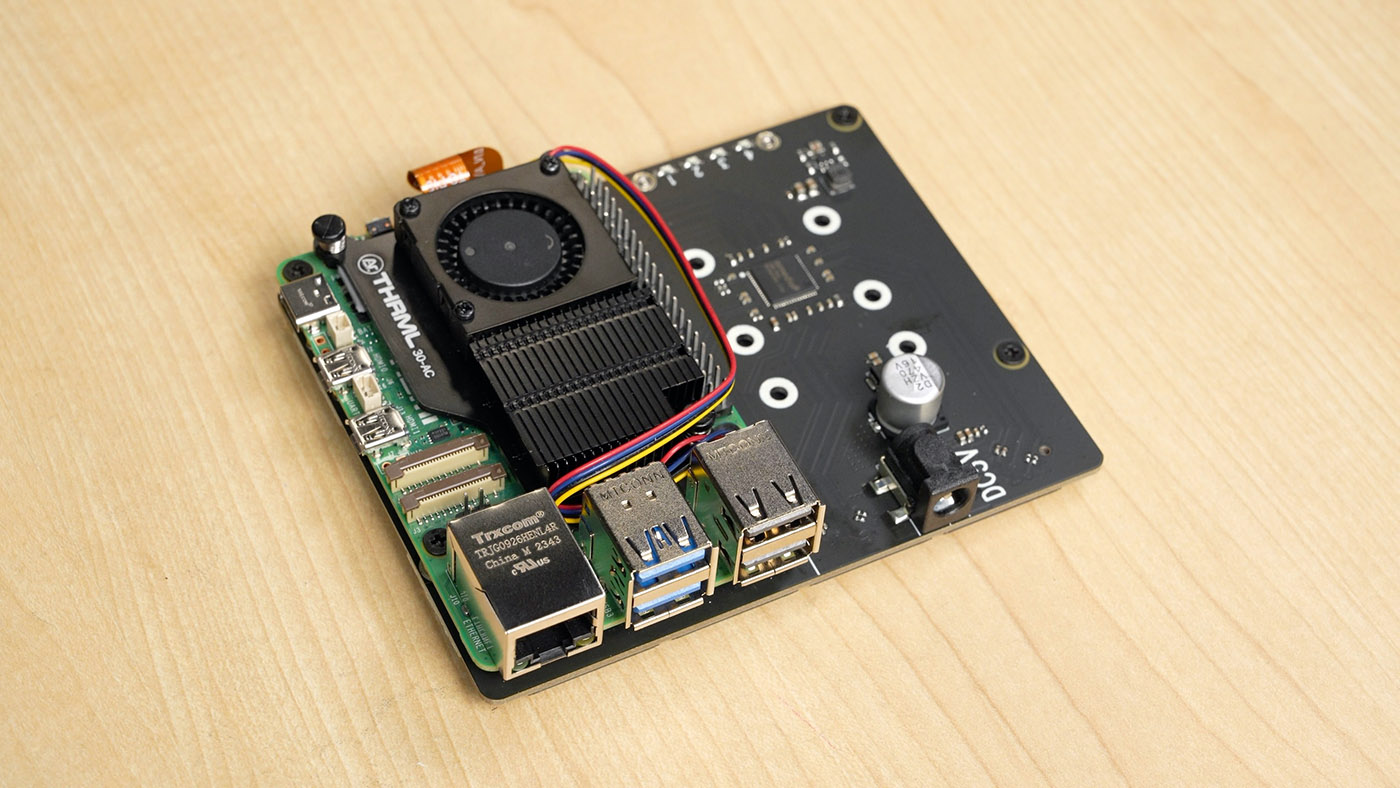

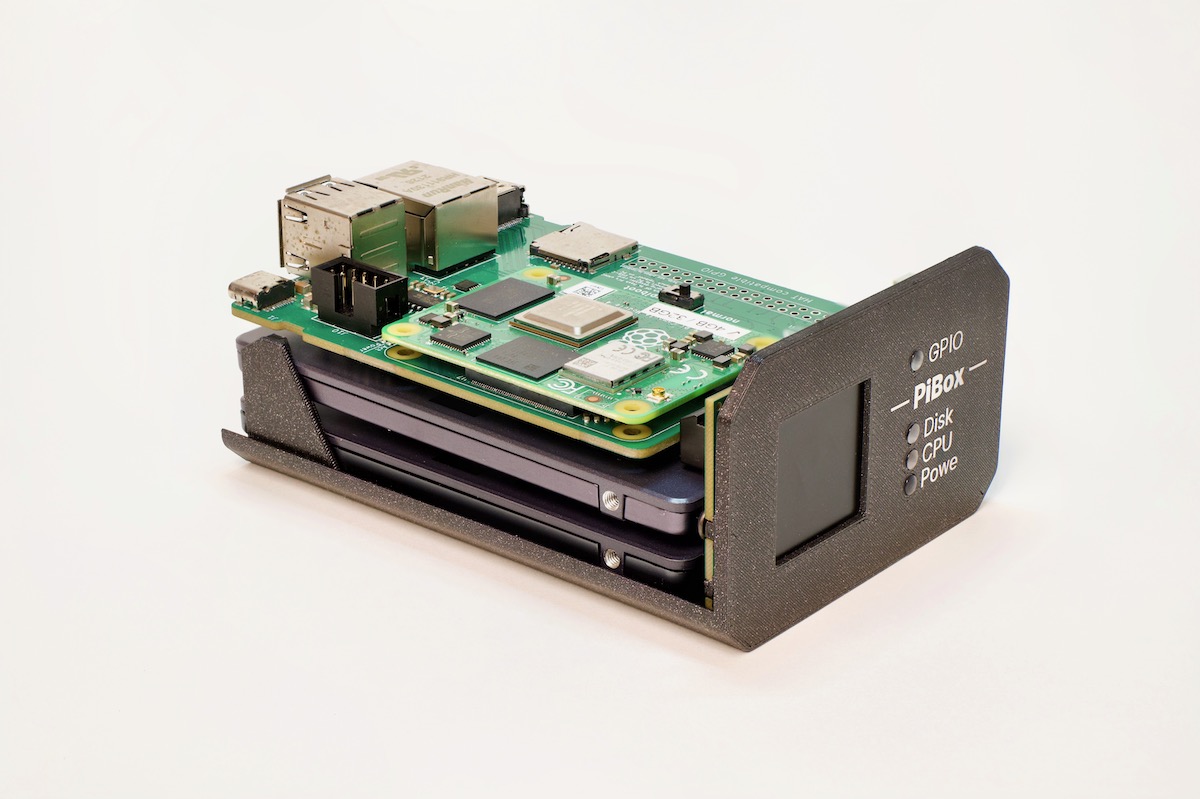

As I briefly mentioned yesterday, a commenter on this blog reported successfully installing an M.2 socket on the Pi 500's empty header (something I attempted, rather poorly!). With a few added components, and 3.3V supplied from a bench power supply to a pad on the underside of the board, the M.2 slot works just fine, allowing the use of NVMe SSDs or other PCIe devices.
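If you try this mod yourself, one quick way to check whether the drive is being detected is to look for an NVMe controller in the output of `lspci`. The script below is a rough sketch, not part of the modder's notes. It assumes a Linux install (such as Raspberry Pi OS) with `pciutils` installed to provide `lspci`, and the helper name `find_nvme_controllers` is hypothetical.

```python
import subprocess

# lspci lists NVMe drives under this PCI device class name.
NVME_CLASS = "Non-Volatile memory controller"


def find_nvme_controllers(lspci_output: str) -> list[str]:
    """Return the lspci lines that describe NVMe controllers (hypothetical helper)."""
    return [
        line.strip()
        for line in lspci_output.splitlines()
        if NVME_CLASS in line
    ]


def main() -> None:
    # Assumes pciutils is installed; report the problem instead of crashing if not.
    try:
        result = subprocess.run(
            ["lspci"], capture_output=True, text=True, check=True
        )
    except (OSError, subprocess.CalledProcessError) as err:
        print(f"Could not run lspci: {err}")
        return

    controllers = find_nvme_controllers(result.stdout)
    if controllers:
        for line in controllers:
            print("Found:", line)
    else:
        print("No NVMe controller detected on the PCIe bus.")


if __name__ == "__main__":
    main()
```

If nothing shows up, that only tells you the drive isn't enumerating; it won't tell you which step of the mod went wrong.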

Since then, the commenter has emailed me further proof, along with notes for anyone wishing to follow in their footsteps.

First, solder four minuscule capacitors onto the PCIe lines running to the NVMe slot (I think their values can be found in the CM5 IO Board schematics, though I haven't confirmed this). These parts are incredibly small, so a good microscope and solid SMD soldering skills are essential.