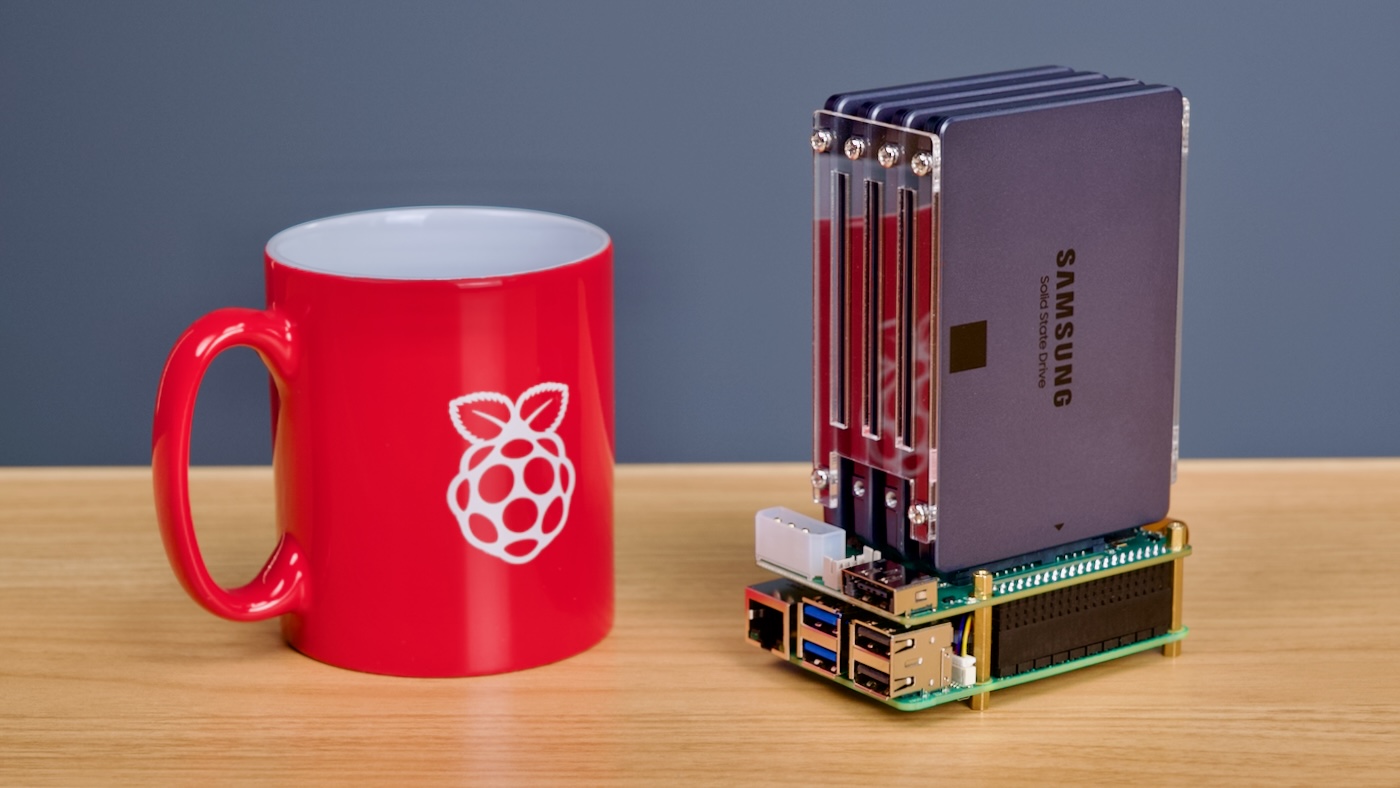

Radxa's SATA HAT makes compact Pi 5 NAS

Radxa's latest iteration of its Penta SATA HAT has been retooled to work with the Raspberry Pi 5.

The Pi 5 includes a PCIe connector, which allows the SATA HAT to interface directly via a JMB585 SATA-to-PCIe bridge, rather than relying on the older Dual/Quad SATA HAT's SATA-to-USB-to-PCIe setup.
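One rough way to see which path a drive takes is to look at where its block device sits in Linux's sysfs tree. The sketch below is a minimal illustration, not something from Radxa or the Pi documentation. It assumes the usual kernel naming, where a disk behind a USB bridge has a `usbN` component in its resolved `/sys/block` path and a disk on an AHCI controller (such as one exposed by a PCIe SATA bridge) has an `ataN` component. The function names `classify_transport` and `list_disks` are hypothetical helpers made up for this example.

```python
import os
import re


def classify_transport(sysfs_path: str) -> str:
    """Guess a disk's transport from its resolved sysfs device path.

    Assumption: USB-attached disks have a 'usbN' path component and
    AHCI/SATA-attached disks have an 'ataN' component.
    """
    parts = sysfs_path.split("/")
    # Check USB first: a USB-to-SATA bridge hides the SATA side from the host.
    if any(re.fullmatch(r"usb\d+", p) for p in parts):
        return "usb"
    if any(re.fullmatch(r"ata\d+", p) for p in parts):
        return "sata"
    return "other"


def list_disks(sys_block: str = "/sys/block") -> dict[str, str]:
    """Map each sd* block device to its guessed transport."""
    result: dict[str, str] = {}
    if not os.path.isdir(sys_block):
        return result  # not on Linux, or sysfs is not mounted
    for name in sorted(os.listdir(sys_block)):
        if name.startswith("sd"):
            real = os.path.realpath(os.path.join(sys_block, name))
            result[name] = classify_transport(real)
    return result


if __name__ == "__main__":
    for name, transport in list_disks().items():
        print(f"{name}: {transport}")
```

On a Pi 5 with the Penta SATA HAT, drives would be expected to show up as `sata` under these assumptions, while drives on the older USB-based HATs would show up as `usb`. Exact sysfs paths vary by kernel and board, so treat the result as a hint rather than a definitive answer.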

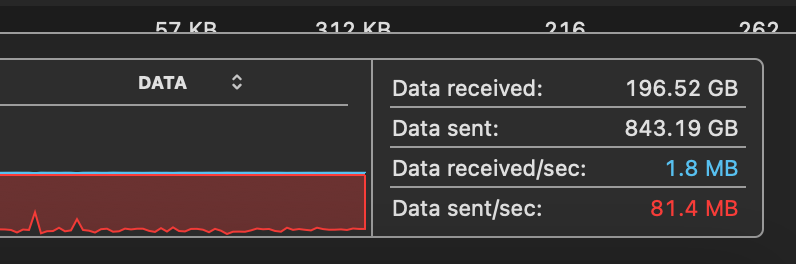

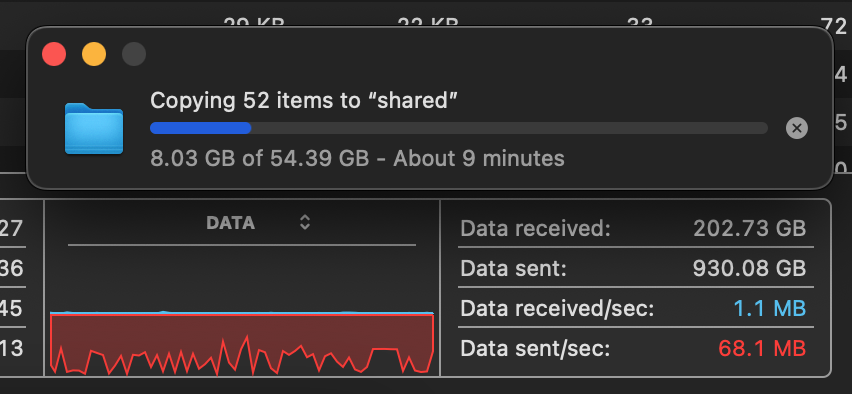

Does the direct PCIe connection help? Yes.

Is the Pi 5 noticeably faster than the Pi 4 for NAS applications? Yes.

Is the Pi 5 + Penta SATA HAT the ultimate low-power NAS solution? Maybe.