AWS S3 Glacier Deep Archive - Difficulty deleting files with accents

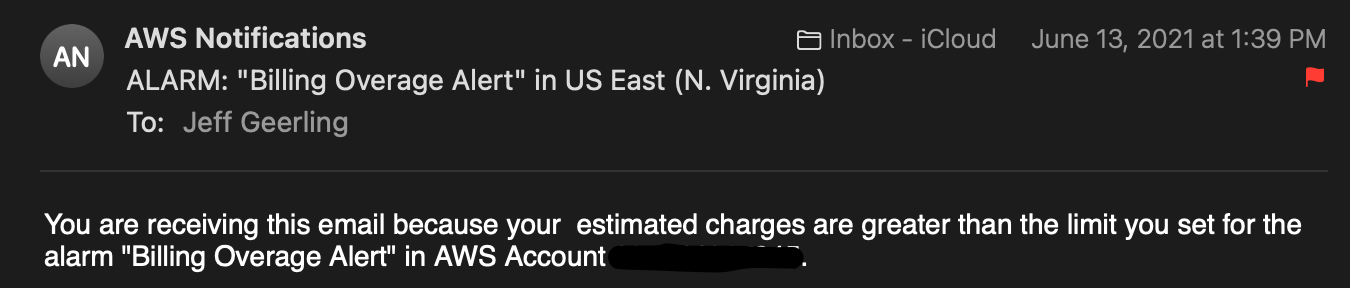

A few days ago, the billing alert on my personal AWS account fired and sent me an email saying I'd already exceeded my comfort threshold, and it was only the second week of the month!

I had just rearranged my entire backup plan to change the structure of my archives, both locally and in my S3 Glacier Deep Archive mirror on AWS, so I suspected something hadn't been moved or deleted in my backup S3 bucket.

And I was right.

But I wanted to write this up for two reasons: