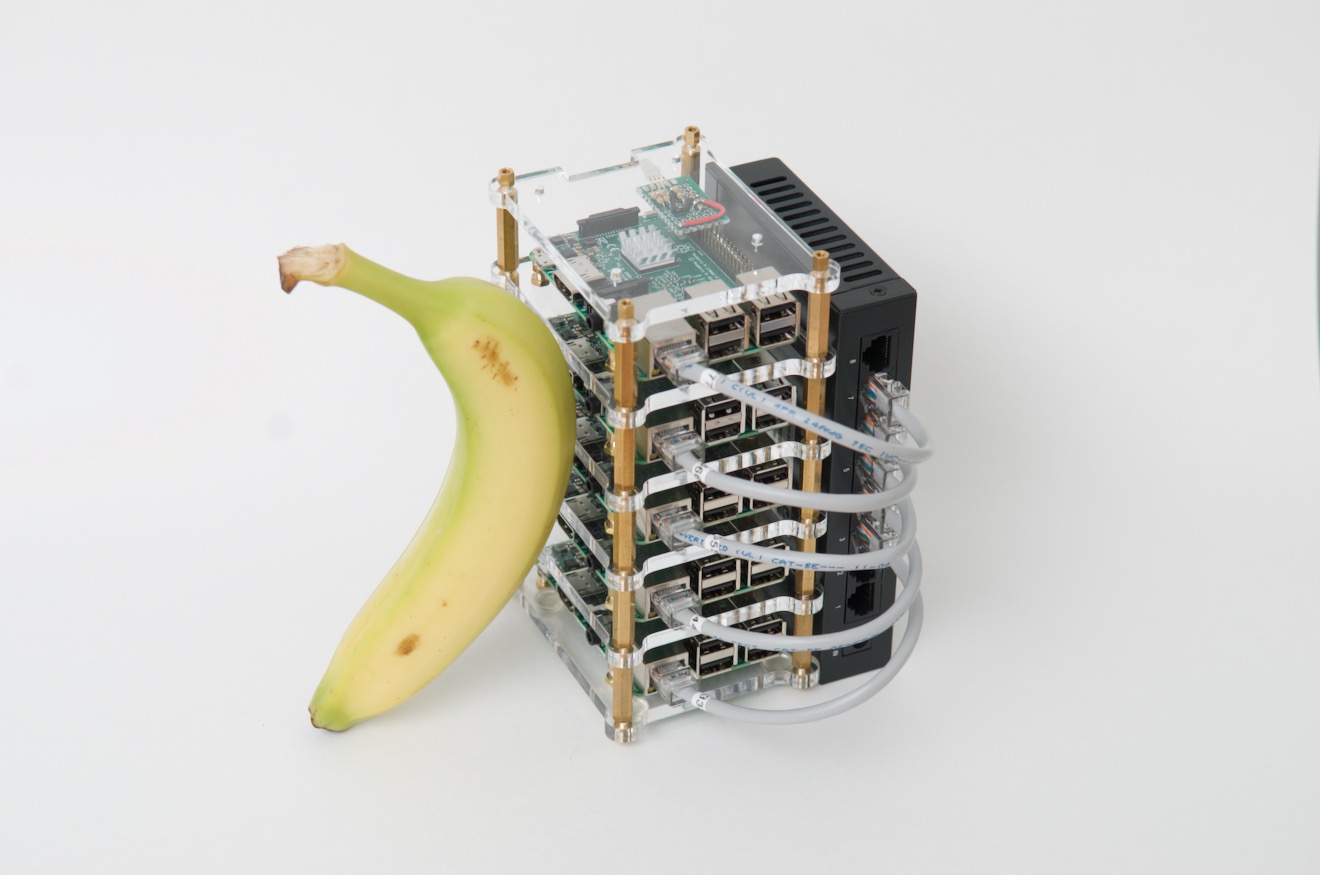

After I posted my Raspberry Pi Blade server video last week, lots of commenters asked what you'd do with a Pi cluster. Many asked out of curiosity, while others seemed to shudder at the very idea of a Pi cluster, because obviously a cheap PC would perform better... right?

Before we go any further, I'd say probably 90 percent of my readers shouldn't build a Pi cluster.

But some of you should. Why?

Well, the first thing I have to clear up is what a Pi cluster isn't.

Note: This blog post corresponds to my YouTube video of the same name: Why would you build a Raspberry Pi Cluster? Go watch the video on YouTube if you'd rather watch than read this post!

What a Pi cluster CAN'T do

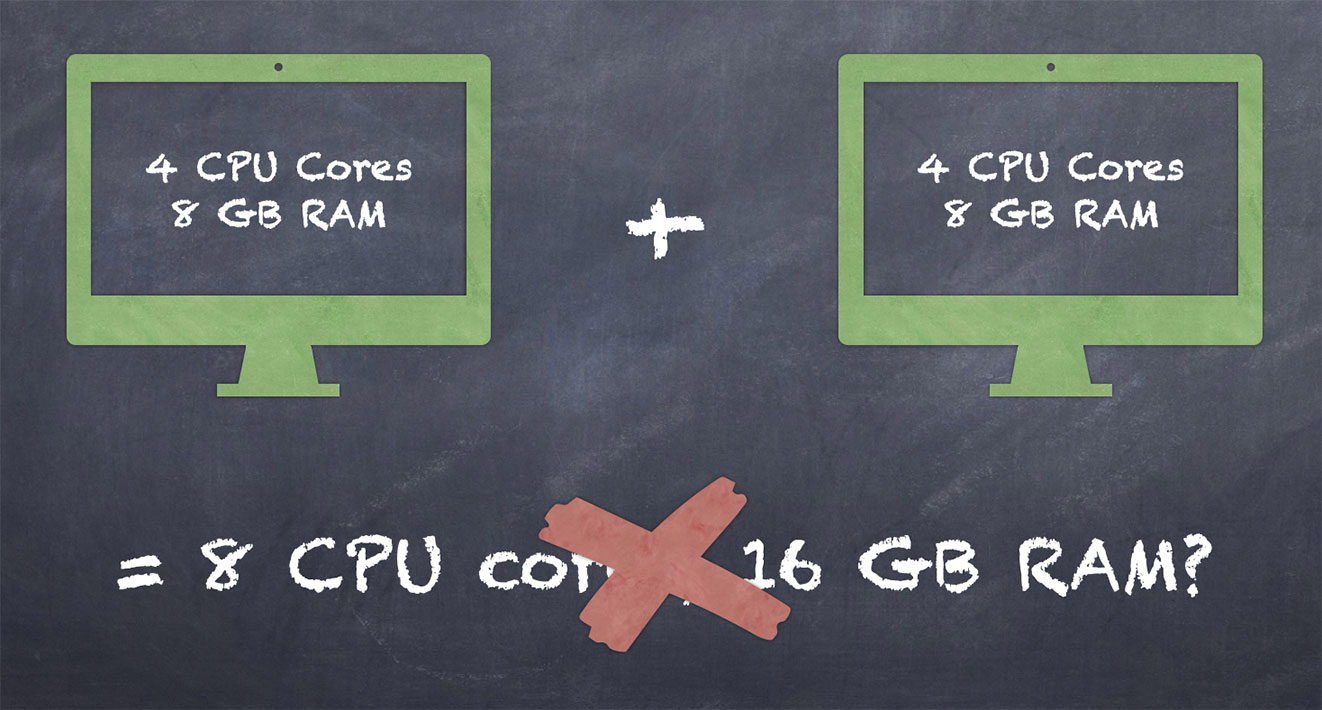

Some people think when you put together two computers in a cluster, let's say both of them having 4 CPU cores and 8 gigs of RAM, you end up with the ability to use 8 CPU cores and 16 gigs of RAM.

Well, that's not really the case. You still have two separate 4-core CPUs, and two separately-addressable 8 gig portions of RAM.

Storage can sometimes be aggregated in a cluster, to a degree, but even there, you suffer a performance penalty and the complexity is much higher than just having one server with a lot more hard drives.

So that's not what a cluster is.

Instead, a cluster is a group of similar computers—or even in some cases, wildly different computers—that can be coordinated through some sort of cluster management to perform certain tasks.

The key here is that tasks must be split up to work on members of the cluster. Some software will work well in parallel. But there's other software, like games, that can only address one GPU and one CPU at a time. Throwing Flight Simulator at a giant cluster of computers isn't gonna make it any faster. Software like that simply won't run on any Pi cluster, no matter how big.
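To make "split up" a bit more concrete, here's a minimal Python sketch of the idea. It's an illustration only: the function names are made up for this example, counting primes is just a stand-in for any job that divides cleanly, and threads on one machine play the part of separate Pis. On a real cluster each chunk would be shipped to a different node by whatever cluster manager you use.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, n_chunks):
    """Split a list into n_chunks contiguous pieces of nearly equal size."""
    size, extra = divmod(len(items), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks each take one leftover item.
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def is_prime(n):
    """Trial division; slow on purpose so the work is non-trivial."""
    if n < 2:
        return False
    return all(n % d for d in range(2, int(n ** 0.5) + 1))


def count_primes(numbers):
    """The work one node does on its own chunk, using only its own memory."""
    return sum(1 for n in numbers if is_prime(n))


def count_primes_on_cluster(numbers, n_nodes=4):
    """Hand one chunk to each 'node' and combine the partial results."""
    chunks = split_into_chunks(numbers, n_nodes)
    # Assumption: each thread stands in for one Pi in the cluster.
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = list(pool.map(count_primes, chunks))
    return sum(partials)


total = count_primes_on_cluster(list(range(100)), n_nodes=4)
print(f"primes below 100: {total}")
```

The important part is that `count_primes` never needs to see another node's chunk. A game that wants one big pool of CPU, GPU, and RAM has no equivalent of that step, which is why it can't be spread out this way.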

What a Pi cluster CAN do

Luckily, there's a LOT of software that does run well in smaller chunks, in parallel.

For example, right now in my little home cluster—which I'm still building out—I'm running Prometheus and Grafana on one Pi, monitoring my Internet connection, indoor air quality, and household power consumption. That Pi's also running Pi-hole for custom DNS and to prevent ad tracking on my home network.

This next Pi runs Docker and serves the website PiDramble.com.

And the next one manages backups for my entire digital life, backing up everything offsite on Amazon Glacier. (I'll be exploring my backup strategy in-depth and sharing my open source backup configuration later this year.)

I also have another set of Pis that typically runs Kubernetes (and hosts PiDramble.com, among other sites), but I'm rebuilding that cluster right now, so the other sites are running on a DigitalOcean VPS.

But there's tons of other software that runs great on Pis. Pretty much any application that can be compiled for ARM processors will run on the Pi. And that includes most things you'd run on servers these days, thanks to Apple adopting ARM with the new M1 Macs, and Amazon using Graviton instances in their cloud.

I'm considering hosting NextCloud and Bitwarden soon, to help reduce my dependence on cloud services and for better password management. And many people run things like Home Assistant on Pis to manage home automation, and there are thousands of different Pi-based automation solutions for home and industry.

But why a cluster?

But before we get to specifically why some people build Pi clusters, let's first talk about clusters in general.

Why would anyone want to build a cluster of any type of computer? I already mentioned you don't just get to lump together all the resources. A cluster with ten AMD CPUs and ten RTX 3080s can't magically play Crysis at 8K at 500 fps.

Well, there are actually a number of reasons, but the two I'm usually concerned with are uptime and scalability.

For software other than games, you can usually design it so it scales up and down by splitting up tasks into one or more application instances. Take a web server for instance. If you have one web server, you can scale it up until you can't fit more RAM in the computer or a faster CPU.

But if you can run multiple instances, you could have one, ten, or a hundred 'workers' running that handle requests, and each worker could take as much or as little resources as it needs. So you could, in fact, get the performance of ten AMD CPUs split up across ten computers, but in aggregate.
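Here's a rough sketch of what "multiple workers handling requests" can look like, assuming the simplest possible scheduling policy (round-robin). The class name, the `pi-N` worker names, and the request strings are all invented for illustration; a real setup would put a load balancer or a Kubernetes Service in front of actual web server instances.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hand each incoming request to the next worker in a fixed rotation."""

    def __init__(self, workers):
        if not workers:
            raise ValueError("need at least one worker")
        self._rotation = cycle(workers)

    def dispatch(self, request):
        """Return (worker, response) for one request."""
        worker = next(self._rotation)
        # A real worker would do the actual request handling here.
        return worker, f"{worker} handled {request}"


# Hypothetical worker names; scale the list up or down as needed.
workers = [f"pi-{i}" for i in range(1, 4)]
balancer = RoundRobinBalancer(workers)

# Nine requests spread over three workers.
handled = Counter(
    balancer.dispatch(f"GET /page/{n}")[0] for n in range(9)
)
print(dict(handled))
```

Adding capacity is then a matter of adding another name to `workers`, rather than finding a bigger CPU for one machine.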

Not everything scales that easily, but even so, another common reason for clustering is uptime, or reliability. Computers die. There are two types of people in the world: people who have had a computer die on them, and people who will have a computer die on them.

And it's not just complete failure. Computers sometimes do weird things, like disk access getting slow. Or a machine starts erroring out a couple times a day. Or the network goes from a gigabit to 100 megabits for seemingly no reason.

If you have just one computer, you're putting all your eggs in one basket. In the clustering world, we call these servers "snowflakes". They're precious to you, unique and irreplaceable. You might even name them! But the problem is, all computers need to be replaced someday.

And life is a lot less stressful if you can lose one, two, or even ten servers, while your applications still run happy as can be—because you're running them on a cluster.

Having multiple Pis running my apps (and having good backups and automation to manage them) means when a microSD card fails, or a Pi blows up, I toss it out and can have a spare running in minutes.
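As a toy illustration of that "lose a server, keep running" idea, here's a small Python sketch that moves apps off failed nodes onto whichever healthy node is least loaded. This is not how my cluster or Kubernetes actually schedules things; the function name, node names, and app-to-node mapping are assumptions made up for the example.

```python
def reschedule(assignments, healthy_nodes):
    """Return a new app -> node mapping that only uses healthy nodes.

    Apps already on a healthy node stay put. Apps on a failed node move
    to the healthy node currently running the fewest apps.
    """
    if not healthy_nodes:
        raise RuntimeError("no healthy nodes left")

    load = {node: 0 for node in healthy_nodes}
    result = {}

    # First pass: keep everything that is already on a healthy node.
    for app, node in assignments.items():
        if node in load:
            result[app] = node
            load[node] += 1

    # Second pass: place the orphaned apps, least-loaded node first
    # (ties broken by node name so the outcome is predictable).
    for app, node in assignments.items():
        if node not in load:
            target = min(load, key=lambda n: (load[n], n))
            result[app] = target
            load[target] += 1

    return result


# Hypothetical layout: pi-2 has just died.
before = {
    "grafana": "pi-1",
    "pihole": "pi-1",
    "website": "pi-2",
    "backups": "pi-3",
}
after = reschedule(before, healthy_nodes=["pi-1", "pi-3"])
print(after)
```

With one snowflake server there is nowhere for `website` to go. With a cluster, the app just lands on another node while you swap the dead Pi.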

But why a Pi Cluster?

But that doesn't answer the question why someone would run Raspberry Pis in their cluster.

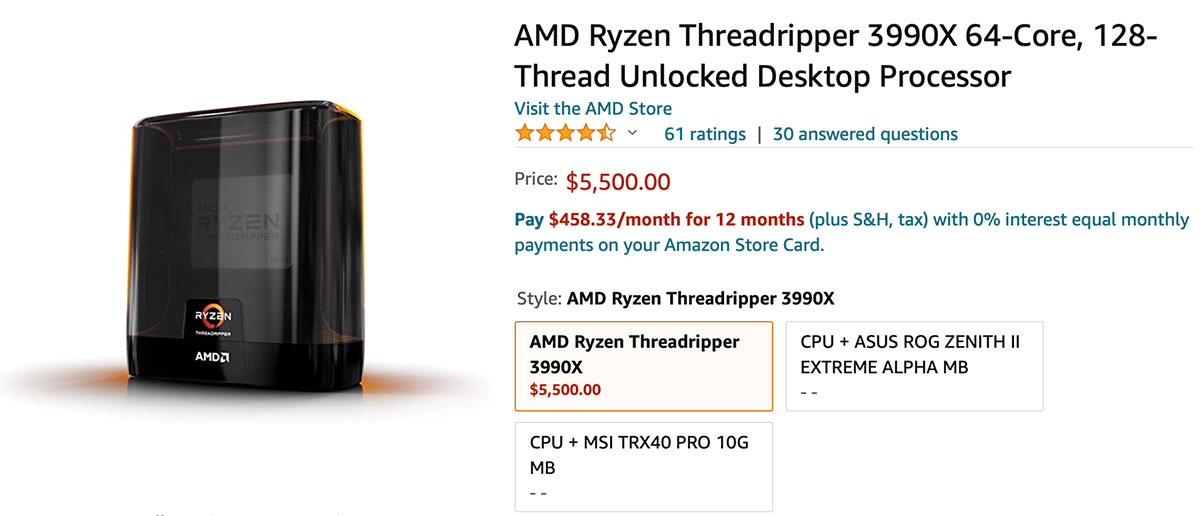

A lot of people questioned whether a 64-core ARM cluster built with Raspberry Pis could compete with a single 64-core AMD CPU. And, well, that's not a simple question.

First I have to ask: what are you comparing?

If we're talking about price, are we talking about 64-core AMD CPUs that alone cost $6,000? Because that's certainly more expensive than buying 16 Raspberry Pis with all the associated hardware for around $3,000, all-in.

If we're talking about power efficiency, that's even more tricky.

Are we talking about idle power consumption? Assuming the worst case, with PoE+ power to each Pi, 16 Pis would total about 100W of power consumption, all-in.

According to Serve The Home's testing, the AMD EPYC 7742 uses a minimum of 120 Watts—and that's just the CPU.

Now, if you're talking about running something like crypto mining, 3D rendering, or some other task that's going to try to use as much CPU and GPU power as possible, constantly, that's an entirely different equation.

The Pi's performance per watt is okay, but it's no match for a 64-core AMD EPYC running full blast.

The EPYC's total energy consumption would be higher (400+ W compared to 200W for the entire Pi cluster full-tilt), but you'll get a lot more work out of that EPYC chip on a per-Watt basis, meaning you could compute more things, faster.

But there are a LOT of applications in the world that don't need full-throttle 24x7. And for those applications, unless you need frequent bursty performance, it could be more cost-effective to run on lower power CPUs like the ones in the Pi.
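To put those wattage figures in perspective, here's a quick back-of-the-envelope calculation in Python. The power draws are the ones quoted above (with 400 W used as a floor for the EPYC under load, and the idle EPYC figure covering the CPU only). The electricity price is purely an assumption for illustration, so plug in your own rate.

```python
HOURS_PER_YEAR = 24 * 365

# Assumption: a made-up electricity price, in dollars per kWh.
PRICE_PER_KWH = 0.15


def annual_kwh(watts):
    """Energy used in a year by a device drawing `watts` continuously."""
    return watts * HOURS_PER_YEAR / 1000


def annual_cost(watts, price_per_kwh=PRICE_PER_KWH):
    """Yearly electricity cost for a constant draw of `watts`."""
    return annual_kwh(watts) * price_per_kwh


# Power draws quoted in the post, in watts.
draws = {
    "16 Pis, idle (PoE+, worst case)": 100,
    "EPYC 7742 idle (CPU only)": 120,
    "16 Pis, full tilt": 200,
    "EPYC full blast (at least)": 400,
}

for label, watts in draws.items():
    print(f"{label}: {annual_kwh(watts):.0f} kWh/yr, "
          f"${annual_cost(watts):.2f}/yr")
```

This only covers energy, not how much work gets done per watt, which is where the EPYC wins under sustained load. For something that mostly idles, the idle rows are the ones that matter.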

Performance isn't everything

But a lot of people get hung up on performance. It's not the be-all and end-all of computing.

I've built at least five versions of my Pi cluster. I've learned a LOT. I've learned about Linux networking. I've learned about Power over Ethernet. I've learned about the physical layer of the network. I've learned how to compile software. I've learned how to use Ansible for bare metal configuration and network management.

These are things that I may have learned to some degree from other activities, or by building virtual machines on one bigger computer. But I wouldn't know them intimately. And I wouldn't have had as much fun, since building physical clusters is so hands-on.

So many people, myself included, do it mostly for the educational value.

Even still, some people say it's more economical to build a cluster of old laptops or PCs you may have laying around. Well, I don't have any laying around, and even if I did, unless you have pretty new PCs, the performance per watt from a Pi 4 is actually pretty competitive with a 5 to 10 year old PC, and they take up a LOT less space too.

And besides, the Pis typically run silent, or nearly so, and don't act like a space heater all day, like a pile of older Intel laptops.

Enterprise use cases

But there's one other class of users that might surprise you: enterprise!

Some people need ARM servers to integrate into their Continuous Integration or testing systems, so they can build and test software on ARM processors. And it's a lot cheaper to do it on a Pi than on a Mac mini or an expensive Ampere computer, if you don't need the raw performance.

And some enterprises need an on-premise ARM cluster to run things like they would on AWS Graviton, or to test things out for industrial automation, where there are tons of Pis and other ARM processors in use.

Finally, some companies integrate Pis into larger clusters, as small low-power ARM nodes to run software that doesn't need bleeding-edge performance.

But there's no ECC RAM!

Another sentiment I see a lot is that it's "too bad the Pi doesn't have ECC RAM."

Well, the Pi technically does have ECC RAM! Check the product brief! The Micron LPDDR4 RAM the Pi uses technically has on-die ECC.

I'd say half the people who complain about a lack of ECC couldn't explain specifically how it would help their application run better.

But it is good to have for many types of software. And the Pi has it... or does it? Well, no, at least not in the same way high-end servers do. On-die ECC can prevent memory access errors in the RAM itself, but it doesn't seem to be integrated with the Pi's System on a Chip, so the error correction is minimal compared to what you'd get if you spent tons of money on a beefy server with ECC integrated through the whole system.

Conclusion

So anyways, those are my thoughts on what you could do with a cluster of Raspberry Pis. I'm sure people will find a lot to nitpick, and that's perfectly fine.

Some people don't see the value or the fun in building clusters, just like some people couldn't fathom why someone would want to build their own chair out of wood using hand tools, when you can just buy a functional chair from Ikea or any furniture store.

But I will continue building and rebuilding Pi clusters (and clusters in AWS, and clusters on my Mac using VMs, and other types of clusters too!), and will continue enjoying the experience and learning process. What are some other things you've seen people do with Pi clusters? And have you built your own cluster of computers before, Raspberry Pi or anything else? I'd love to see your examples in the comments.

Comments

Very cool! Thank you for sharing this knowledge.

I also look forward to reading about your backup strategy. I believe that most people spend too little time thinking about backups. It’s all fun and games until one loses decades of photos. So I’m sure a lot of your readers (me included) can get useful inspiration from reading about your backup system.

Maybe I've been brain damaged from working in the IT industry for the better part of 20 years, but I find the Raspberry Pi to be a wonderful piece of technology. Even better, when it's clustered. Sure, the Pi lacks the oomph and the x86 architecture of conventional computers/servers, but who cares, when you can use it for so many different things, while learning and improving skills along the way without breaking your bank account? I haven't gotten around to building my own Pi-cluster (yet), so I am (just) running a home lab with a VMware cluster based on two Asrock Deskmini 310s with Intel I5s and 32 GB RAM each. But a Raspberry Pi cluster? Now that's my idea of fun! :)

Heh. You're not the only one, and NO, you're not brain damaged. Right now, I've got a rack filled with Pis and a single 10th Gen i5 (It's handling the processing for my OTA tuner setup- the Pi4, it lacks the oomph to handle more than two channel streams...the i5 is overkill, but handles the NAS, the PVR, and 8+ streams for the household on OTA TV...)- the Pi4s are amazingly capable at handling the loads I'm throwing at them.

I think you missed an important why. That is, as a Pi cluster is probably the cheapest cluster you could get, it makes an ideal learning environment. And building such a cluster is a lesson that you probably won't get anywhere else.

Well, some people don't see the value in having a "non-production" Kubernetes cluster you can learn WITH- even with CM4 blades from Uptime Lab, they're cheaper than the usable PC equivalent in size, power consumption, and...heh...even costs. Better yet, I found out that there's a single system image clustering system (which is still relevant- it's a differing take on things than containers and their respective clusters). Not all software can realistically be made to work in a clustering environment without major redesigns. SSI systems intend on making it possible and plausible to run most of that ON a cluster and do it mostly transparently with just a recompile with the modified LLVM toolset they provide. Popcorn Linux is supposedly available for use on X86 and aarch64 systems. Once I catch up a bit with my other projects (Just added MNN to the meta-edgeml Yocto layer last weekend...I have more to do including catching up a wireless support layer for all the various relevant WiFi USB chips from Realtek and others that're not in the 5.x kernels...) I'm kind of planning on trying to make a Yocto layer that peels that out and into an image for use on the Pi4/CM4 for this reason.

Why build a cluster? Because we CAN...and it can do real work ultimately. Is it better than a production system? Hardly. Can you learn new things? Yep. Can you do it cheaper than with X86 hardware? Absolutely.

Re ECC RAM: It has to do with the ECC error correction ability. The people bemoaning it haven't separated, "enterprise," and, "personal"/"educational" which are two differing things. You've the potential without ECC being present in the RAM of errors corrupting operations, writes, etc. or outright crashing the system.

In practice...they don't get that they don't have ECC in their PCs even though, by their take they should- and that it's kind of EXPENSIVE to have ECC in there.

Hello, Jeff. Thank you for your great work. Can you help me with techniques for how you would deploy an OS to bare metal servers? As I understand it, you write memory cards for the Raspberry Pi, but what would be the right move for a group of identical PCs? Ansible will be my second step, but how to put an OS on before installing Ansible is not yet clear to me.

20 years ago, when we were using primitive clusters for CAD/CAE and High Performance Computing, we jokingly called them "RAIS". A play on RAID, it stood for Redundant Arrays of Inexpensive Servers. "Inexpensive" was relative, as the RISC servers we used ran $20,000+. Still, 30-40 of them beat $1M MiniSupercomputers for engineering analysis. Best of all, tools like Load Sharing Facility allowed us to use "spare" cycles on desktop workstations for added umph.

yes, 2 pis having 8 gigs or rams together don't make 16 gigs, but do they make 4 hdmi port, 8 usb ports, 2 CSI and DSI ports, and 52 GPIO pins?

oops, sorry. "8 gigs of ram", not "8 gigs or rams".

Heh, no problem :)

Can you buy a PI cluster with 8 PI 4s 8GB, with a cluster OS pre-installed??

I'm semi-experienced with Linux and want to get away from the bulky computer and go to something smaller but just as powerful to run my home network and home appliances.

Hello Jeff,

Excuse my ignorance I still don't get it. I have a raspi with a home automation server and another one running some local Web apps. What would a cluster give me that I may be missing? Apart from educational purposes of course

maybe you could cluster them up and if pi 1 has unused threads, and pi 2 is overloaded with tasks, the extra tasks on pi 2 will be moved to pi 1 as long as you have the necessary resources needed.

note that the task running on pi 1 can't access the resources on pi 2.

but if the resource is an ssd, pi 1 can ssh into pi 2 and the ssd will be mounted as a network drive

I use gluster for this. I have a folder which is replicated to all Pis which contains data volumes for my containers. It doesn't matter where a container spins up, it always has access to its data.

what's a gluster?

Like he wrote, it could provide redundancy.

So in case one of the Pis fails the others could continue executing the software.

i have a few doubts on clustered computing:-

1. does giving poweroff on the shell of the master node also shutdown all the workers?

2. does giving the power on signal to the master node power on all the others?

3. if a task associated with gpio, pcie or usb is assigned on node x, will it run ONLY on node x? (node x can also be the master node)

4. do sudo apt install/remove instructions run on all workers?

(using raspberry pis running ubuntu without kubernetes)

You typically need some sort of orchestration / management to interact with a cluster.

For example, I use Ansible to manage my clusters, and shutting everything down is a matter of running the command:

Ansible reaches out to each computer and runs the command `shutdown now` as `sudo` and then exits, and all the Pis shut themselves down cleanly.

in this video i saw that the pis were having the os and simply ran as a cluster ----> https://www.youtube.com/watch?v=PUAIIibXMYw