This is the fourth video in a series discussing cluster computing with the Raspberry Pi, and I'm posting the video + transcript to my blog so you can follow along even if you don't enjoy sitting through a video :)

In the last episode, I showed you how to install Kubernetes on the Turing Pi cluster, running on seven Raspberry Pi Compute Modules.

In this episode, I'm going to show you some of the things you can do with the cluster.

A brief introduction to managing Kubernetes

In the last episode I explained in a very basic way how Kubernetes works. It runs applications on your servers. To help manage Kubernetes, there is a handy little tool called kubectl (sometimes I call it cube control, but if we go down that road I have to start talking about why it's called a 'gif' and not a 'jiff', and why spaces are better than tabs, or vim is better than emacs, and I don't want to go there!).

I won't go into a deep dive on managing Kubernetes in this series, because that could be its own series, but I will be using kubectl a lot: to deploy apps to the cluster, to check on their status, and to make changes.

Pi-Particular Pitfalls

And just as a warning, there may be a few parts of this blog post that are a little over your head. That's okay, that's kind of how it is working with Kubernetes. The only way to learn is to build test clusters, make them fail in new and unexpected ways, then start over again!

I like to think of it like every second you're working right at the edge of your understanding. When it works, it's magic, when it doesn't... well, good luck!

No, I'm just kidding—I'll try to help you understand the basics, and once you start building your own cluster, hopefully you can start to understand how it all works.

One of the most frustrating things you run into if you're trying to do things with Raspberry Pis (or most any single board computer like the Pi) is the fact that many applications and container images aren't built for the Pi.

So you end up in a cycle like this:

- You think of some brilliant way you could use the Pi cluster.

- You search for "How to do [brilliant thing X] in Kubernetes or K3s"

- You find a repository or blog post with the exact thing you're trying to do.

- You deploy the thing to your Pi cluster.

- It doesn't start.

- You check on the status, and Kubernetes says it's stuck in a CrashLoopBackOff.

- You check the logs, and you see:

exec user process caused "exec format error"

This happens more often than I'd like to admit. You see, the problem is, most container images like the ones you see on Docker Hub or Quay are built for typical 'X86' computers, like for the Intel or AMD processor you're probably using on your computer right now. Most are not also built for 'ARM' computers, like the Raspberry Pi.

And even if they are, there are many different 'flavors' of ARM, and maybe it's built for 64-bit ARM but not for 32-bit ARM that you might be running if you're not on the 64-bit version of Raspberry Pi OS!

It can be complicated. Sometimes if you really want to do something and can't find a pre-built image that does what you want, you'll have to build your own container images. It's not usually too difficult, but it can be a bit frustrating, especially when you're quickly testing a new idea and other people have already done the work for you, but you can't use it because it's not compatible.

To illustrate: let's look at one of the simplest 'Hello world' examples from Kubernetes' own documentation: Hello Minikube.

If I create the hello-node deployment with kubectl create deployment hello-node --image=k8s.gcr.io/echoserver:1.4, then check on its status using kubectl get pods, after a couple minutes I'll see the pod in CrashLoopBackOff state.

And if I check the pod's logs with kubectl logs I get—you guessed it—exec format error.
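One way to avoid that surprise is to compare a node's CPU architecture with the architectures an image was built for before you deploy it. The sketch below is only an illustration, not something from the video: `k8s_arch_for` is a hypothetical helper that maps the machine name printed by `uname -m` to the architecture name Kubernetes and container registries use.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): translate the machine
# name reported by `uname -m` into the architecture name used by Kubernetes
# node labels and multi-arch container images.
k8s_arch_for() {
  case "$1" in
    x86_64)        echo "amd64" ;;
    aarch64|arm64) echo "arm64" ;;
    armv6l|armv7l) echo "arm" ;;
    *)             echo "unknown" ;;
  esac
}

# Example: describe the machine you are currently logged into.
echo "This machine reports $(uname -m), which maps to: $(k8s_arch_for "$(uname -m)")"
```

On the cluster itself, `kubectl get nodes -L kubernetes.io/arch` should show the same architecture name for every node, which you can then compare against the architectures listed for an image on its registry page.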

The Venn Diagram

And not to be discouraging, but sometimes when you want to solve a problem with Kubernetes, on a cluster of Raspberry Pis, you're kind of inflicting pain on yourself—imagine this scenario:

You want to run a Minecraft server on your cluster. Now think about all the other people in the world who run Minecraft servers. How many of these people not only run a Minecraft server, but run it in a Docker container... in a Kubernetes cluster... on a Raspberry Pi, which might be running a 32-bit operating system!?

The number of people who are doing the same thing you're trying to do is usually pretty small, so like an early explorer, you may have to do some extra work to get things working! Google is your friend here, because often there are one or two other people who are doing the exact same thing, and finding their work can help you a lot.

Running some Applications

Now I'll show you what I deployed to my Turing Pi cluster and how I did it.

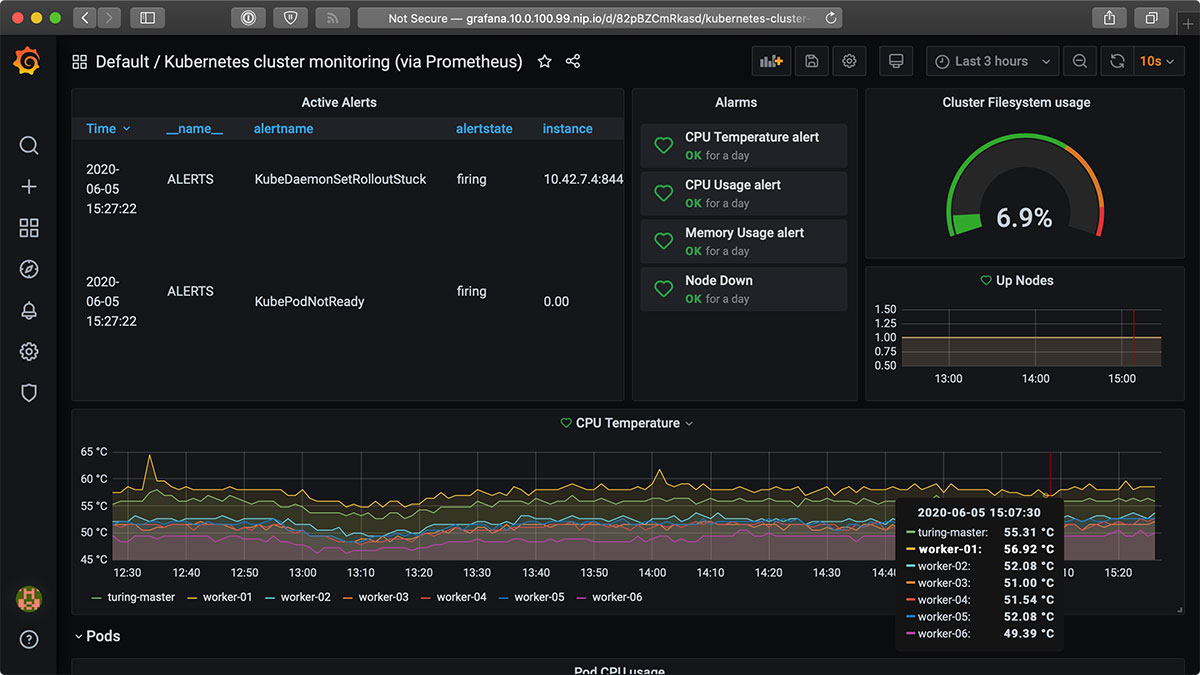

Prometheus, Grafana, and Alertmanager

The first thing I like to do with my Kubernetes cluster is make sure I have something monitoring the cluster. The most common tools used for this purpose are Prometheus and Grafana.

I initially tried to run the kube-prometheus project that's maintained by the CoreOS organization on GitHub, but after I followed the Quickstart guide, I ended up getting the dreaded:

exec user process caused "exec format error"

I opened an issue on GitHub for that problem, because I believe the project should work on 32-bit ARM OSes, but apparently the kube-rbac-proxy image currently isn't built for them. So then I started changing configurations to try to get it working on my cluster, but while I was doing that, I found an awesome project by Carlos Eduardo that had already done everything I wanted to do, but better!

So I downloaded Carlos' cluster-monitoring project to the Pi and deployed it instead:

- Log into the master Pi:

ssh pirate@turing-master

- Switch to the root user:

sudo su

- Install prerequisite tools:

apt-get update && apt-get install -y build-essential golang

- Clone the project:

git clone https://github.com/carlosedp/cluster-monitoring.git

- Edit the vars.jsonnet file, tweaking the IP addresses to servers in your cluster, and enabling the k3s option and armExporter.

- Run make vendor && make (this takes a while)

- Run the final commands in the Quickstart for K3s guide to kubectl apply manifests, and wait for everything to roll out.

Once everything has started successfully, you should be able to access Prometheus, Alertmanager, and Grafana at the URLs you can retrieve via:

kubectl get ingress -n monitoring

The Cluster Monitoring project includes a really nice custom dashboard for Pi-based clusters that even shows the CPU temperature and alerts you if the Raspberry Pis are too hot and could be throttled!

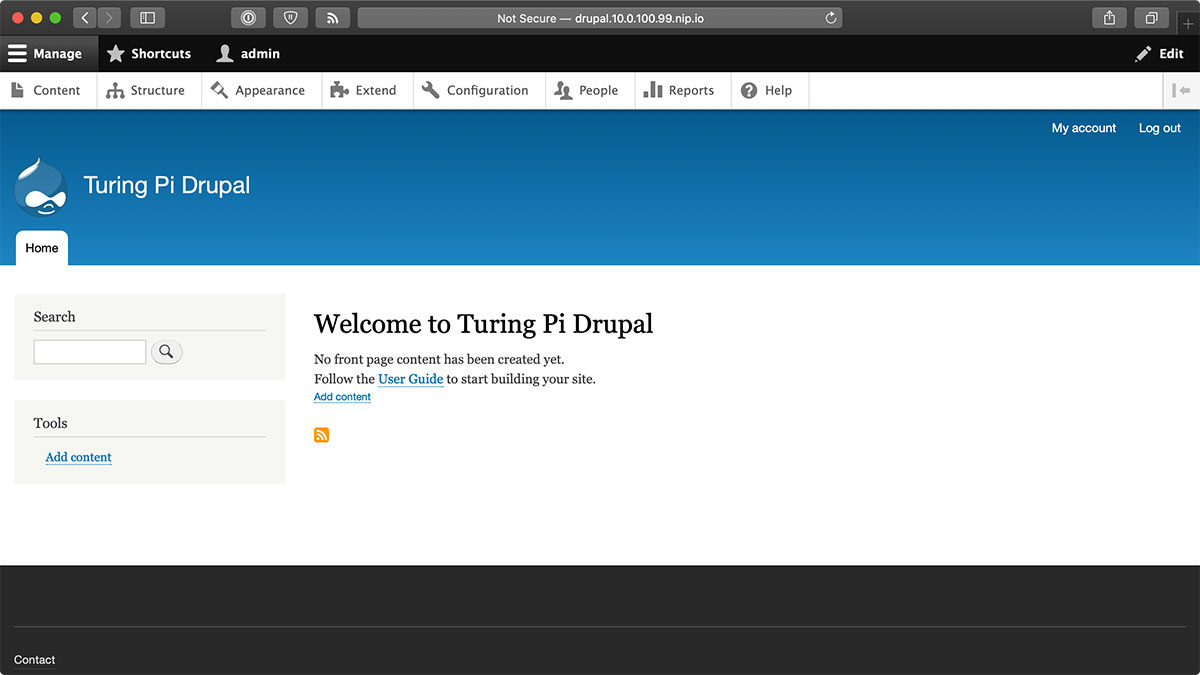

Drupal

Now, monitoring the cluster is great, but if you're not running anything on the cluster, what use is monitoring?

I'm pretty familiar with Drupal, since it was one of the first open source projects I started working with, when I built my first major website over a decade ago.

It's a pretty flexible Content Management System, and is great for building big websites. It can run on Kubernetes, but is a little heavyweight, so let's see if we can get it to run on our Compute Module nodes with 1 GB of RAM each.

I have a barebones Drupal in Kubernetes K3s on Raspberry Pi configuration, which uses a couple 'Kubernetes manifest' files.

Manifests describe one or more Kubernetes resources that help you run an application.

In this case, I downloaded both the drupal.yml and mariadb.yml files to my Turing Pi, in a drupal folder, then I ran:

kubectl create namespace drupal

And then applied the contents of the manifests to my cluster:

kubectl apply -f drupal.yml

kubectl apply -f mariadb.yml

And then ran watch kubectl get pods -n drupal to watch as the Pods for Drupal and MariaDB were started up.

The way these manifests work is there is an 'Ingress' resource that tells Kubernetes to accept requests for the hostname drupal.10.0.100.99.nip.io. Those requests should be routed to the 'Service' named drupal on port 80. The drupal Service then directs requests to containers that are part of the drupal 'Deployment'. And the 'Deployment's containers have a 'PersistentVolumeClaim' that allows the containers to store data permanently so if the Drupal container dies, the files will still be accessible after Kubernetes launches a replacement.
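To make the first link in that chain more concrete, here is a rough sketch of what an Ingress resource for this setup could look like. It is not the actual manifest from my repository: `ingress_manifest` is a hypothetical helper, and it assumes the `networking.k8s.io/v1` Ingress API, a Service listening on port 80, and a namespace named after the Service.

```shell
#!/bin/sh
# Hypothetical helper (not the author's actual manifest): print a minimal
# Ingress that routes requests for one hostname to one Service on port 80.
# Assumes the networking.k8s.io/v1 API and a namespace named like the Service.
ingress_manifest() {
  host="$1"
  service="$2"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${service}
  namespace: ${service}
spec:
  rules:
    - host: ${host}
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ${service}
                port:
                  number: 80
EOF
}

# Example: print an Ingress for the Drupal hostname used in this post.
ingress_manifest drupal.10.0.100.99.nip.io drupal
```

The output could be piped into `kubectl apply -f -` to try it on a test cluster. Older clusters may only offer an earlier beta version of the Ingress API, in which case the `apiVersion` and `backend` fields would need adjusting.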

Things may be a little over your head, but that's okay. Kubernetes is like that, and you really have to experiment and be willing to accidentally break your cluster a lot, then rebuild it, before you start getting the hang of all the resources you have to deploy in Kubernetes, and how they are tied together.

In the end, I accessed the hostname drupal.10.0.100.99.nip.io in my browser, then went through Drupal's install UI, and finally got a fresh new Drupal website running on my cluster!
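A quick note on that hostname: nip.io is a public wildcard DNS service, so any name ending in an IP address followed by .nip.io resolves to that IP address. If your DNS server refuses to resolve those names (some routers and resolvers block answers that point at private addresses), an entry in /etc/hosts does the same job. The sketch below uses two hypothetical helpers, `nip_io_ip` and `hosts_line`, and only handles the dotted form of nip.io names used in this post.

```shell
#!/bin/sh
# Hypothetical helpers (not from the original post) for nip.io hostnames.

# Print the IP address embedded in a name like drupal.10.0.100.99.nip.io.
# Prints nothing if the name has fewer than four dot-separated fields.
nip_io_ip() {
  name="${1%.nip.io}"   # strip the trailing .nip.io suffix
  echo "$name" | awk -F. 'NF >= 4 { print $(NF-3) "." $(NF-2) "." $(NF-1) "." $NF }'
}

# Print an /etc/hosts line that maps the hostname to its embedded IP.
hosts_line() {
  ip="$(nip_io_ip "$1")"
  if [ -n "$ip" ]; then
    echo "$ip $1"
  fi
}

# Example: the Drupal hostname used in this post.
hosts_line drupal.10.0.100.99.nip.io
# prints: 10.0.100.99 drupal.10.0.100.99.nip.io
```

Because /etc/hosts has no wildcard support, you would need one such line per hostname (Drupal, Grafana, Prometheus, and so on).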

WordPress

Drupal is a pretty popular CMS that powers many of the world's largest websites, but a similar application that's also built with PHP and uses a database is WordPress.

I also have a very basic WordPress in Kubernetes K3s on Raspberry Pi configuration, and it looks very similar to the Drupal configuration.

Following the same steps as with the Drupal manifests, I downloaded the manifests and applied them to the cluster. After a couple minutes, the WordPress and MariaDB Pods were up and running, and I visited the WordPress hostname wordpress.10.0.100.99.nip.io.

The installation was much faster, as WordPress doesn't require as many resources as Drupal to install itself on your server, and a minute or so later, I had a WordPress website also running on my cluster!

Minecraft

Now, running websites on the cluster is great, and you could even consider hosting a website that's accessible to the Internet, but I like to have fun with my Raspberry Pis, and one fun game that you can enjoy with your friends either on the same home network or over the internet is Minecraft.

Minecraft has a server you can download and run, and lucky for us, there's a Helm chart available for it.

What's Helm, you ask? Well, many people manage applications using raw YAML manifest files like we did for Drupal and WordPress. Some people use tools like kustomize or Ansible to template manifests and manage application deployments in Kubernetes or K3s. Helm is a widely used tool to do the same thing, and there are pre-made Helm 'Charts' available to install almost any popular software you might know of. They might not always be the right fit, but Helm Charts are often the quickest way to try new things in a Kubernetes cluster.

To get started with Helm, you have to install it. I did that on the Turing Pi master node using the Helm install guide, and you just have to download the right binary—arm for a 32-bit OS like HypriotOS, or arm64 for a 64-bit OS—and then move the downloaded helm binary into the /usr/local/bin folder.

For Minecraft, there's a semi-official minecraft Helm chart in the 'stable' Helm repository, so to use it, you have to add that repository to Helm:

helm repo add stable https://kubernetes-charts.storage.googleapis.com

Then create a namespace to hold Minecraft and its resources:

kubectl create namespace minecraft

Kubernetes namespaces are great for isolating different applications. Drupal and WordPress have databases that are only accessible within their own Kubernetes namespace (though you could configure them to be accessible outside as well). And namespaces are easy to delete if you mess one up; deleting a namespace deletes everything inside so you don't have to try cleaning up a bunch of Kubernetes resources in the default namespace!

Now, we can use Helm to create a Minecraft server instance in the cluster, but first, we need to create a minecraft.yaml file with the 'values' that Helm will use for our particular cluster. Some of these values are required to make the Minecraft server run better on a lightweight CPU like the one in the Compute Module 3+ that I'm using in the Turing Pi cluster. So create minecraft.yaml with the contents:

---
imageTag: armv7

livenessProbe:
  initialDelaySeconds: 60
  periodSeconds: 10
  failureThreshold: 180

readinessProbe:
  initialDelaySeconds: 60
  periodSeconds: 10
  failureThreshold: 180

minecraftServer:
  eula: true
  version: '1.15.2'
  difficulty: easy
  motd: "Welcome to Minecraft on Turing Pi!"
  # These settings should help the server run better on underpowered Pis.
  maxWorldSize: 5000
  viewDistance: 6

And then run the following command to deploy the Helm chart:

helm install --version '1.2.2' --namespace minecraft --values minecraft.yaml minecraft stable/minecraft

Minecraft server takes a while (up to 15-20 minutes on the Compute Module 3+!) to generate its world, and you can monitor that progress by running:

kubectl logs -f -n minecraft -l app=minecraft-minecraft

After a little while, you should see a message like:

[12:25:47] [RCON Listener #1/INFO]: RCON running on 0.0.0.0:25575

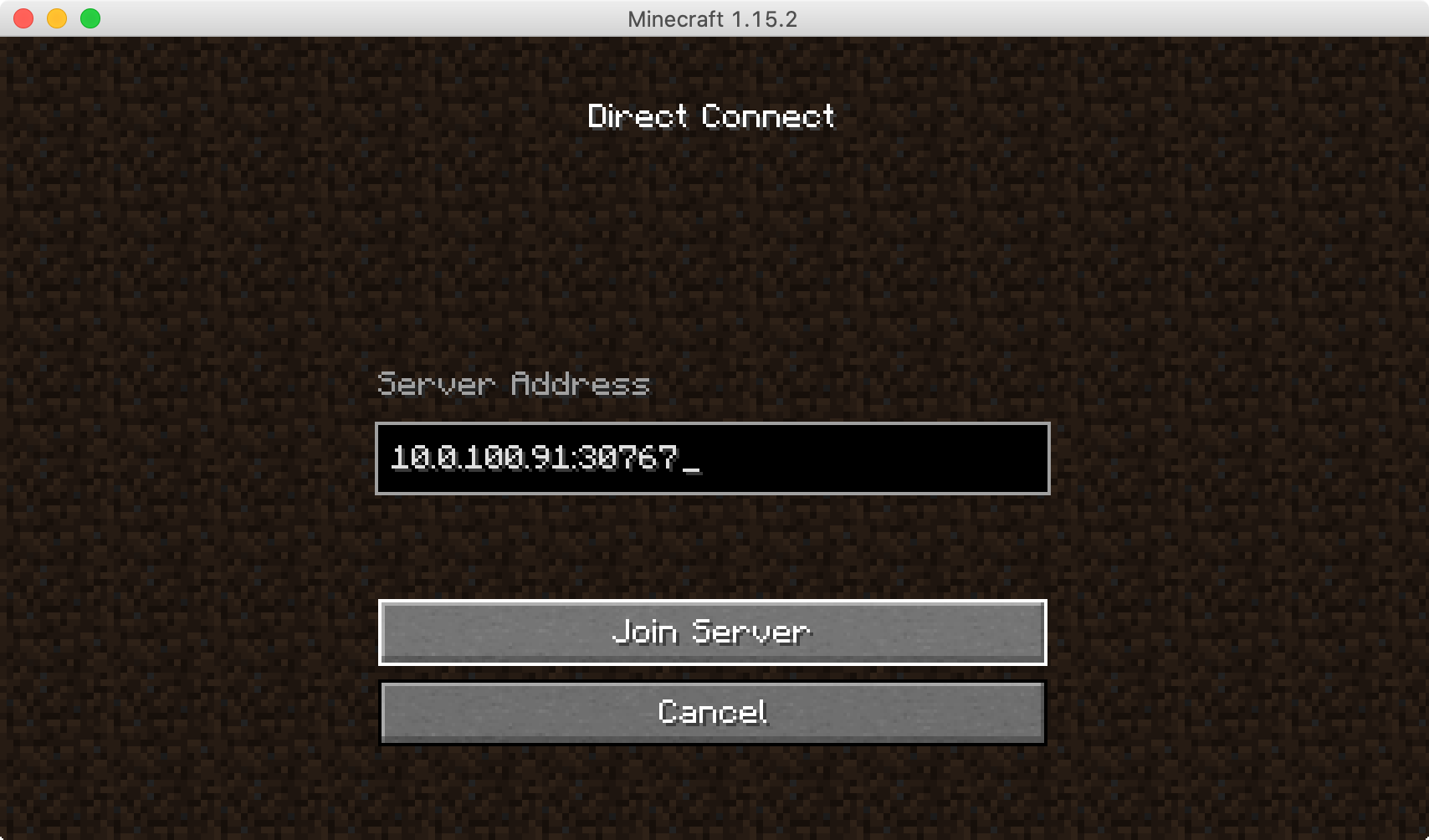

And that's when you can connect to the server; open up Minecraft on your Mac, PC, or whatever other device, use Multiplayer and then Direct Connect, and connect to the server on the Turing Pi cluster. The server should be something like:

10.0.100.70:30767

You can get the IP address (EXTERNAL-IP) and port (PORT, the part between the : and /TCP) using this command:

kubectl get service -n minecraft
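If you'd rather not pick the port out by eye, the value in the PORT(S) column is easy to split in a script. This is just a sketch: `node_port_from` is a hypothetical helper, and the sample value assumes Minecraft's default port 25565 mapped to the port 30767 shown above.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): given a PORT(S) value
# such as 25565:30767/TCP, print the part between the ':' and the '/'.
node_port_from() {
  ports="${1#*:}"       # drop everything up to and including the first ':'
  echo "${ports%%/*}"   # drop the first '/' and everything after it
}

# Example with a value copied from the PORT(S) column.
node_port_from "25565:30767/TCP"
# prints: 30767
```

If the column lists several ports separated by commas, this returns only the first one.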

And now you can start playing Minecraft with your friends!

The Raspberry Pi Compute Module 3+ isn't the best Minecraft server in the world, even though it works... you may want to consider running Minecraft server on a Pi 4 or another faster computer with more RAM available. I'll get more into benchmarking in the next Turing Pi cluster episode!

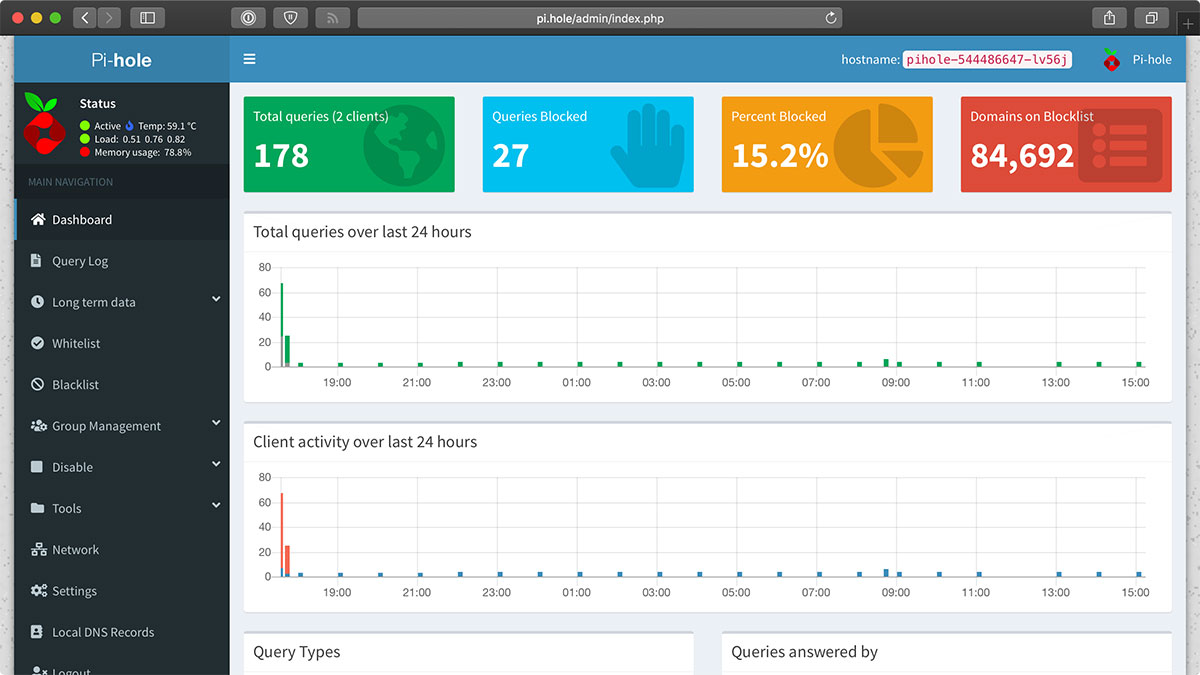

Pi-hole

Now there's one other tool I like to run in my house to help block ads or other unwanted content, and also to allow me to set up some custom DNS rules for different devices like Raspberry Pis that I use around the house.

That tool is Pi-hole, and it's pretty easy to get installed—especially since there's a Helm chart that does all that work for you!

GitHub user Christian Erhardt maintains a pihole Helm chart in a custom Helm repository, so the first step is to add that custom repository:

helm repo add mojo2600 https://mojo2600.github.io/pihole-kubernetes/

Now create a namespace inside which you can deploy Pi-hole:

kubectl create namespace pihole

As with Minecraft, there are a few values we need to override to get Pi-hole working correctly on the Turing Pi cluster, so create a values file named pihole.yaml and put the following inside:

---
persistentVolumeClaim:
  enabled: true
ingress:
  enabled: true
serviceTCP:
  loadBalancerIP: 'LOAD_BALANCER_SERVER_IP_HERE'
  type: LoadBalancer
serviceUDP:
  loadBalancerIP: 'LOAD_BALANCER_SERVER_IP_HERE'
  type: LoadBalancer
resources:
  limits:
    cpu: 200m
    memory: 256Mi
  requests:
    cpu: 100m
    memory: 128Mi
# If using in the real world, set up admin.existingSecret instead.
adminPassword: admin

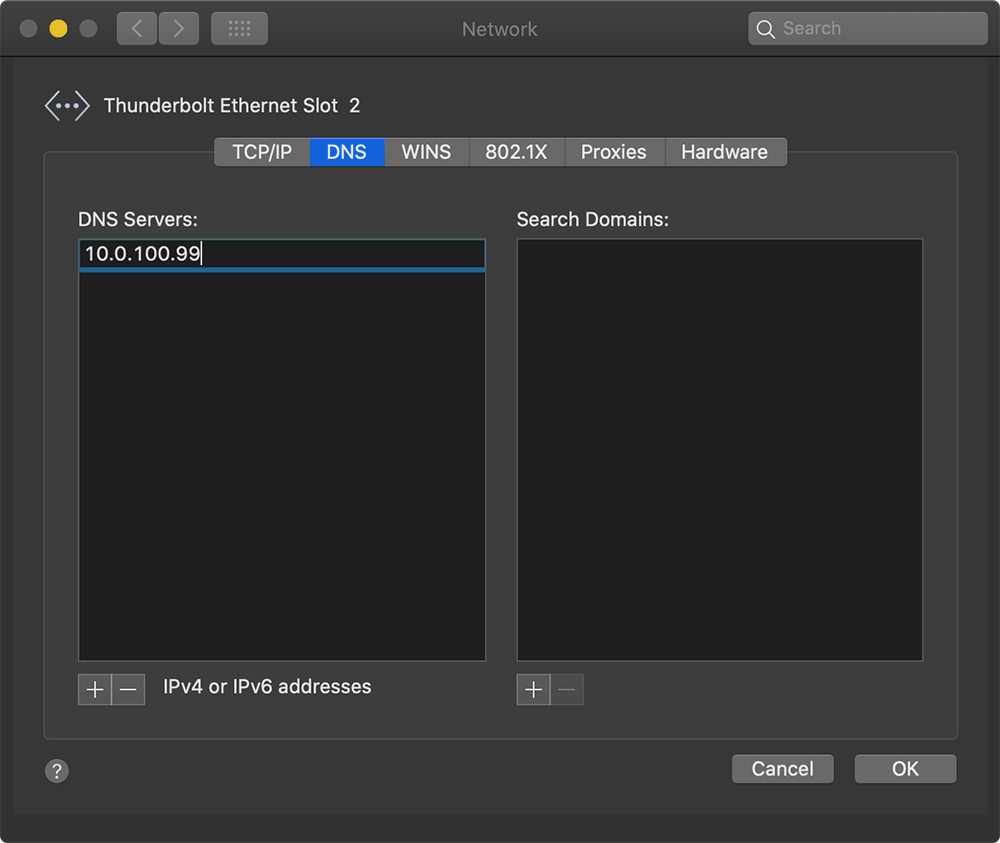

Substitute the IP address of one of your Pi worker nodes for the LOAD_BALANCER_SERVER_IP_HERE (e.g. 10.0.100.99).
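If you keep the placeholder in a template and want to fill it in from the command line, a one-line sed filter is enough. This is a sketch only: `fill_load_balancer_ip` is a hypothetical helper that reads a values file on standard input and prints the result.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): read a values file on
# stdin and print it with the placeholder replaced by the given IP address.
fill_load_balancer_ip() {
  sed "s/LOAD_BALANCER_SERVER_IP_HERE/$1/g"
}

# Example: substitute the placeholder in a single line of YAML.
printf "loadBalancerIP: 'LOAD_BALANCER_SERVER_IP_HERE'\n" | fill_load_balancer_ip 10.0.100.99
# prints: loadBalancerIP: '10.0.100.99'
```

For a whole file, something like `fill_load_balancer_ip 10.0.100.99 < pihole.yaml > pihole-values.yaml` would work, where pihole-values.yaml is just an example output name.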

Then use Helm to install Pi-hole:

helm install --version '1.7.6' --namespace pihole --values pihole.yaml pihole mojo2600/pihole

After a couple minutes (check on the progress with kubectl get pods -n pihole), Pi-hole should be available. If you edit your computer's /etc/hosts file and add a line like 10.0.100.99 pi.hole, then you can access the web UI (and log in with password 'admin') by visiting http://pi.hole.

On my Mac, I can now change the DNS server in my Network System Preferences to the IP address 10.0.100.99, and now Pi-hole will start serving DNS for my Mac, and blocking ads and trackers however I configure it!

There's a lot more you can do with Pi-hole, so go read the Pi-hole documentation for more!

Summary

So those were just a few of the things you can run on a cluster.

But throughout my time working on these different deployments, I learned a lot about the limitations of the Compute Module 3+, so I'm looking forward to the Compute Module 4, which is coming out later this year. It should be a lot faster, and hopefully, it will have options with more RAM!

And it would be crazy for me to do all this work, and not share it with you. Time and again, you have been generous in supporting me, like on Patreon and GitHub Sponsors (and if you don't already support my work there, please consider doing it!).

So to give back, I've been secretly maintaining a repository for the Turing Pi Cluster project, which is still a work in progress, but will quickly, and automatically, bootstrap ALL the apps that I just showed in this video, on your own cluster, using Ansible. It doesn't even have to be a Turing Pi cluster! You can run it on any kind of ARM cluster. And today, I released that project as open source code on GitHub, so you can use it as you like; here it is: Turing Pi cluster configuration for Raspberry Pi.

In fact, I ran this automation on my own Raspberry Pi Dramble cluster, which has four Raspberry Pi 4's with 2 GB of RAM each, and then I did a bunch of benchmarks on the two clusters, to see how they perform.

The results were surprising—in many cases, the Pi Dramble cluster ran things twice as fast as the Turing Pi cluster! There are a number of reasons for this, but the main one is the Pi 4 is overall a much better and faster computer than the Compute Module 3+.

But how much better? And does the new 64-bit Raspberry Pi OS change anything? And what are my thoughts on the Turing Pi after having done all of this? Well, to find out, subscribe to my YouTube channel. In the next video, I'm going to give a more thorough review of the Turing Pi itself. And please comment below if you have any questions about the Turing Pi, K3s, or clusters in general. I will do a follow-up video with questions and answers soon!

Comments

I'm still fairly new to Ansible and K8s, but is there a reason why you used the community helm module instead of the one within ansible? I see that you've made contributions to the one in community, so have you just tailored that module to how you like things to operate and use that one instead for that reason?

The one within Ansible has not been maintained for a couple years and does not work at all with Helm 3 (and barely worked with Helm 2). The one in the community.kubernetes repository is the only version of the module that is maintained now, and I highly recommend anyone using Helm switches to that version.

I followed your YouTube video and everything works fine, but how do I access this link from my Macintosh?

http://prometheus.192.168.1.42.nip.io

My Mac cannot find that address? Do I need to install anything on my Mac to see nip.io on my k3s cluster?

Thank you.

I figured it out.

My Mac had the DNS of my router 192.168.1.1, but when I changed it to 8.8.8.8 all worked OK.

Thanks

Hi,

Jeff you're a hero.

I've been having the same as your original issue. Is someone able to explain and walk me through how to resolve the .nip.io address when trying to resolve on my external host (macbook) the grafana / prometheus url from the get ingress cmd output. Everything is running perfectly up until the moment I'm trying to resolve the URL nip.io.

Please!

Just following your excellent series on k3s clustering on the Turing Pi (thanks a lot for the big effort & sharing!) and tested HypriotOS lately on my own cluster, and man , falled in love immediately with the cloud-init configuration stuff !

However being a 32-bit OS is a clear show-stopper for me (I use Amazon's Corretto JDK aarch64 and some Docker arm64 images too) , and reading last statements on their Github issues tracker it seems they're not planning a 64-bit version any time soon.

At this point, would you recommend moving to another 64-bit distro (if possible both kernel + userland on 64bit) ?

I've seen another distro named BalenaOS (from the etcher guys) claiming 64-bit and like HypriotOS tuned for k8s + Docker (https://www.balena.io/blog/balena-releases-first-fully-functional-64-bi…).

I've been also considering (just for fun) recompiling a kernel for ArchLinux ARM 64-bit with some kernel modules support to get a more or less clean sheet from check-config (https://github.com/moby/moby/blob/master/contrib/check-config.sh)

Any thoughts on this :D ?

I've been doing a lot of testing with the Raspberry Pi 64-bit OS. The one thing it lacks that I miss a lot is cloud-init, but apparently that's something you can get with Ubuntu 20.04 for Pi, so I might try that out next.

Hi there,

I have similar question as Michael Ventarola. All works fine if DNS on the mac is set to 8.8.8.8. But I can't reach any of ingress hosts when using my router as DNS.

I'm sure the solution is simple, but I just can't figure it out.

Your router's own DNS might be using a DNS server that doesn't work correctly with nip.io / localhost routing. Some ISPs don't serve DNS that well for developer services :(

I'm using PiHole/Tor on another RPI as DNS in my network with my router pointing to that. I'm wondering how it would behave if I will teleport the Pihole/Tor to the cluster.

Ouh man, what an inspiration you're. I was watching your last videos, build a cluster with 4 RPI 4+ and I didn't have so much fun for years. Thank you for that

I ran into the same issue, and the reasons for failure are good: private (RFC 1918) IP address ranges are blocked from resolution by outside DNS for security reasons, and I do not think we should challenge that behaviour.

I suggest you to add a hint to put the RFC1918 ranges (that I expect everyone following these tutorials is using), namely 192.168/16, 172.16/12 and 10/8 address ranges, into /etc/hosts file, to circumvent.

Even better, I suggest to over-come your paradigm that IP addresses were meant to be edged into stone (and thus, turn the life's of infrastructure and network operations teams into a burning hell), and put a FQDN to the master node. You can remove the IP address, and the nip.io domain name, and just replace with the master name, and then put the result of the "kubectl get ingress -n monitoring" output into /etc/hosts, and skip all the nip.io magic and the troubles it creates.

Having said so: Your tutorials rock! I followed them to have a now running Turing PI cluster, with 3 extra nodes. Your mix of Ansible with Kubernetes is just inspiring!

Hi, how did your hosts file look like after you added 192.168/16?

Hi there,

you're using a loadbalancer in the pihole deployment. I don't have one (yet). Do you maybe have a article or video on how to setup loadbalancer ? I saw one video, showing nginx loadbalancer in action, but can't find the way how set it up.

thanx a lot

K3s sets up a loadbalancer that can consume node IPs and balance requests internally in the cluster. Otherwise you'd need to work with an external load balancer or use something like MetalLB.

So actually, to deploy pihole in k3s I need an external loadbalancer, so I can provide its IP in pihole.yaml, as described in your video.

hi there,

Just found "Substitute the IP address of one of your Pi worker nodes for the LOAD_BALANCER_SERVER_IP_HERE (e.g. 10.0.100.99). "

I was probably too tired yesterday :)

Hello Jeff,

Another question I have. After I deployed k3s and pihole using helm I see four TCP pods in pending state. I was digging and found some info about pihole conflicting with traefik which come out of the box with k3s. Wondering did you experience similar behaviour, or did I miss some steps during k3s/pihole installation?

I think I hit the same thing, but since DNS uses UDP that didn't cause any issues with the way I was using PiHole to block traffic on my network.

I have the same issue with NIP.IO. I have attempted to build with hypriot, raspbian 64bit and ubuntu 20.04 (which I prefer due to less desktop baggage vs. raspbian 64bit) and all three fail with nip.io. I don't know if the issue is with my pfsense router or the firewalla blue or my local dns. Regardless, is there another solution? I don't know enough but my guess is this is related to ingress processing. I have looked at the nip.io web site and it is less than helpful. A more detailed description of whatever nip.io is doing would enlighten muchly.

My understanding of nip.io is that it allows wildcard local DNS resolution, so hostnames such as foo.192.168.1.1.nip.io and bar.192.168.1.1.nip.io can be used for servers running on your cluster. Both names resolve to the IP address 192.168.1.1 and the routing of any requests is done internally by parsing the hostname specified in the request. So when you enter foo.192.168.1.1.nip.io into your browser, first the name is resolved to the local IP address, then the http request is parsed and forwarded to the appropriate server - the one called "foo" in this example.

You can resolve local hostnames yourself by adding entries to your /etc/hosts file but it isn't possible to use wildcards so you would need an entry for every server (foo, bar in this example).

That said, I too have problems getting nip.io names to resolve. My network uses Pi-hole (running on dedicated hardware) to block certain sites and at first I thought the nip.io domain was being blocked, so I whitelisted it but the problem remained. nslookup requests simply went unanswered so it appears they are being blocked by upstream DNS servers. Most Pi-hole users use one of the upstream DNS servers suggested on the configuration page of the Pi-hole web GUI, such as Google, Quad9 or Cloudflare. I was running my own recursive DNS server using unbound ( https://docs.pi-hole.net/guides/unbound/ ) on port 5335 of the same hardware and there's something about my configuration (maybe a privacy setting) that seems to block nip.io DNS requests. Changing my upstream DNS server from localhost#5335 to one of the commercial servers fixes the nip.io resolution problem. I'd prefer to use unbound though, so I'll have to spend more time troubleshooting.

I had no clue what nip.io was. I assumed it was just an example. So basically I changed domain name to the name that devices resolve to on my local network. Then I configured, in my case, DNS server to resolve grafana.my.domain to be the IP address of my kubernetes control-plane server. Alternatively, someone above mentioned manually putting name in /etc/hosts file or C:\Windows\System32\drivers\etc\hosts file on windows. Again, add an entry for the 3 servers (grafana.my.domain, prometheus.my.domain, etc..) where my.domain is the domain you changed in the config file. If you don't want to change the domain, you can still add entries in the host file using the nip.io name, but still pointing it to the IP address of the kubernetes control-plane server. As I am running a BIND/NAMED DNS server I just added the entries there. Hope that helps.

i followed all the instructions for the minecraft server. When i run the kubectl get svc --namespace minecraft -w minecraft-minecraft command. it looks like it deploys fine but the external IP gets stuck on pending. I left it running over night in the morning it was still stuck on pending. any suggestions as to how to fix this? Thank you for your time.

Spoiler: when I was attempting to install pihole via helm I got the following error:

"Error: Kubernetes cluster unreachable: Get "http://localhost:8080/version?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused"

It took me a little too long to realize that the cluster management software was running on port 6443, not port 8080. I figured this out by looking in .kube/config.

To fix it, you can specify the kube configuration file in helm during chart installation by using the --kubeconfig flag

To fix this I just ran sudo cp /etc/rancher/k3s/k3s.yaml ~/.kube/config and changed owner:gid to match the user running kubectl and finally changed the mode to 0600.

or do this

export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

For the IP for PiHole, how come for the load balancer IP, why do we use a worker's IP. Why don't we use the master node?

Also for minecraft I am unable to get am external IP address.

I would consider asking on the Turing Pi Cluster project (https://github.com/geerlingguy/turing-pi-cluster)—I often don't have the time to respond directly on the blog when it comes to specific code in one of my repos. Also, I've updated a few things in that repo from what's stated in the video (which I can't change over time :).

Hi Jeff,

Today I was trying to install minecraft on my Raspberry Pi 4 dramble cluster. While trying to run:

> helm repo add stable https://kubernetes-charts.storage.googleapis.com

I got the following error:

Error: repo "https://kubernetes-charts.storage.googleapis.com" is no longer available; try "https://charts.helm.sh/stable" instead

so I believe this repo has been deprecated. So I tried the following command with success:

> helm repo add stable https://charts.helm.sh/stable

"stable" has been added to your repositories

In addition, the minecraft chart in the stable repository is deprecated and has been moved; see https://github.com/geerlingguy/turing-pi-cluster/issues/28

I can confirm adding the itzg repository and changing imageTag to multiarch worked for setting this on Ubuntu 20.04 (arm64 rpi 4). I also had to setup kubeconfig to get helm to install. I had to sudo cp /etc/rancher/k3s/k3s.yaml ~/.kube/config, change owner:gid of file to match the user running kubectl, and change the mode of the file to 0600. The command that was given to find out the port number of the minecraft server did not work. In order to allow connectivity from outside the cluster I had to run a kubectl port-forward command.

Hi Jeff

Excellent series on k3s and you are my new “go to guy” for this kind of thing.

One question I have failed to answer with a day of googling and playing is how to correctly backup and restore a persistent volume in k3s. I found about VolumeSnapshots but It seems not to be available in k3s. How do people usually do this?

The reason for wanting this is that last weekend, I built a nice 5 node pi4 cluster each booting off an SSD (thanks for that video as well) an today I found my Prometheus not starting because of a segmentation violation. A bit of googling pointed me to Prometheus not being too good on 32bit so I took one of the nodes out of the cluster (this feels like real grown-up work), installed 64bit, labelled the node as being 64bit, set the affinity of the Prometheus to the 64 bit node and let k3s try to bring it up. It failed because the persistent volume is on the old 32bit node and the pod is trying to come up on the 64bit node and the want to be together :-).

I suppose ideally I would like to be able to migrate a state full set from one node to another but I think I might be asking too much.

Keep up the good work.

Hi Jeff,

after tearing down a couple times my setup now I have a master (Raspberry Pi 4 4Gb Ram and RaspOS 64 bit) and 5 workers on Turing pi. I set up ansible and cluster monitoring but what I have now is a master node with an average use of the cpus at 80% with load average : 10.17 9.43 9.59 that seams to me a lot for monitoring almost nothing.

Is it normal or do I have to investigate ...... and where?

TIA and keep going!

M.O.

Looks like I've run into my first `CrashLoopBackOff` error. I was able to get `cluster-monitoring` working (sort of, all pods running but no metrics) in a previous iteration of provisioning these pi's.

For some reason, a lot of the pods are failing for me now, I wonder what I did differently this time to cause this behavior. Additional info for anyone curious can be found here: https://github.com/carlosedp/cluster-monitoring/issues/91#issuecomment-…

Jeff, I really enjoy your vids, especially as you don't begin with "Hey what's up guys?" :)

My question is, do I really have to use nip.io addresses if I'm only working locally? I got as far as the ingress bit then hit a brick wall with nip.io etc. I found myself looking up PowerDNS, mysql, LAMP, a Docker account, I mean? Can I not just omit the nip.io from the suffixDomain in the vars.jsonnet config file? Ok I should suck it and see! I know :(

I tried adding 8.8.8.8 to the URI and it does resolve to the local address but cannot locate functions such as Graph?

Hoping for some words of wisdom

Dave

I was waiting for the part where you would show how to expose the apps externally, for cases with a public static IP, since you were showing deployments of Drupal and WordPress. It would not hurt to give a beginner a hint, right?

I subscribed btw.

Hi

Is there a way to remove nip.io from this tutorial? I can't make it work and I think I don't need it......

After kubectl get ingress -n monitoring, it gives me this:

grafana grafana.192.168.2.61.nip.io 192.168.2.64 80,443

192.168.2.61 is the master IP

192.168.2.64 is a worker IP
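A note on the nip.io questions above: as far as I understand it, nip.io is just a public wildcard DNS service, so any name ending in `<IP>.nip.io` resolves to that IP and nothing needs to be installed locally. If public DNS lookups are the problem, one alternative is to pick your own suffix and map the names in the hosts file of the machine you browse from. Here is a minimal sketch of both ideas. The `pi.lan` suffix and the helper names `nipio_ip` and `hosts_lines` are made up for illustration, and the `suffixDomain` in vars.jsonnet would have to be changed to the same suffix for the ingress rules to match.

```shell
# Print the IPv4 address embedded in a nip.io hostname.
# Prints nothing if the name does not look like <anything>.<IP>.nip.io.
nipio_ip() {
  printf '%s\n' "$1" |
    sed -n -E 's/^(.*\.)?(([0-9]{1,3}\.){3}[0-9]{1,3})\.nip\.io$/\2/p'
}

# Print hosts-file lines mapping the three monitoring names to one IP.
# $1 = IP where the ingress controller answers, $2 = suffix of your choice
# (hypothetical; it must match suffixDomain in vars.jsonnet).
hosts_lines() {
  for name in grafana prometheus alertmanager; do
    printf '%s %s.%s\n' "$1" "$name" "$2"
  done
}

# Usage:
#   nipio_ip grafana.192.168.2.61.nip.io    # prints 192.168.2.61
#   hosts_lines 192.168.2.61 pi.lan         # review, then add to /etc/hosts
```

The hosts-file route only helps on machines where you add the entries, which is the convenience nip.io is there to avoid.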

Having Kubernetes is good for failover. However, if the Raspberry Pi is rebooted due to a power failure, microk8s does not restart (as reported by many users and confirmed by my own tests) and you have to restart it manually. That makes installing Pi-hole natively (without microk8s) a better choice. I understand that it will then be the only application installed, but it makes more sense to install only Pi-hole, especially if you are using a Pi 3. Please correct me if I am wrong, and many thanks for your efforts and elaborations.

Hey, thanks for all the blog posts and YouTube videos you made. They really help me get my feet wet in this business.

I have some questions regarding the setup of your Pis. I flashed the images and now I have the user pirate with password "hypriot".

So when I log in to the Pis I have to use those credentials. Therefore I extended the "k3s-ansible" - "sample/group_vars/all.yml" file with two additional keys [ansible_connection, ansible_ssh_pass] so I was able to run Ansible. Did you put SSH keys on your devices first?

Furthermore, I ran the "cluster-monitoring" repository. I get to the point where I can use "kubectl get ingress -n monitoring" and I do not see any errors like you did with Prometheus. However, if I try to reach one of the services (alertmanager, grafana, prometheus), I just get the message that the site cannot be reached. Any recommendations regarding this error? I have already played it through multiple times, always failing when loading the sites.

Hi Jeff,

I am a Subscriber to your Youtube channel.

I came across something interesting that you are probably aware of (perhaps you already have a video on this): the Raspberry Pi CM4-powered Blade Server by Ivan Kuleshov.

I actually have an early prototype and am hoping to do a video on that server soon!

Use arm_64bit=1 in config.txt, then kube-prometheus works.
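For anyone wanting to try that tip, here is a minimal sketch that adds the setting only once, even if run repeatedly. The helper name `enable_arm_64bit` is made up, and the path is an assumption: on Raspberry Pi OS the file is typically `/boot/config.txt` (or `/boot/firmware/config.txt` on newer releases), and other images may differ. As far as I know, this switches the kernel to 64-bit while leaving a 32-bit userland as it is.

```shell
# Ensure "arm_64bit=1" appears exactly once in the given config.txt.
# $1 = path to config.txt (an assumption; check where your OS keeps it).
enable_arm_64bit() {
  config_file="$1"
  if grep -q '^arm_64bit=' "$config_file"; then
    # Setting already present: force its value to 1, keeping a .bak copy.
    sed -i.bak 's/^arm_64bit=.*/arm_64bit=1/' "$config_file"
  else
    # Setting absent: append it.
    printf 'arm_64bit=1\n' >> "$config_file"
  fi
}

# Usage (needs root, since the boot partition is not user-writable):
#   sudo sh -c '. ./enable_arm_64bit.sh; enable_arm_64bit /boot/config.txt'
#   sudo reboot
#   uname -m    # should report aarch64 once the 64-bit kernel is running
```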

Hi Jeff,

I am still new to K3s. I tried to install Pi-hole following your instructions. However, after I ran the command `helm install --version '2.4.2' --namespace pihole --values pihole.yaml pihole mojo2600/pihole`, I got the error message `Error: INSTALLATION FAILED: Kubernetes cluster unreachable: Get "http://localhost:8080/version?timeout=32s": dial tcp [::1]:8080: connect: connection refused`. Could you please advise how I can fix this problem?

Thanks,

Dennis
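For others who hit the same message: the `localhost:8080` in that error usually means Helm could not find a kubeconfig and fell back to its default address. On a default K3s install the kubeconfig is written to `/etc/rancher/k3s/k3s.yaml`, so one likely fix is to point `KUBECONFIG` at it. This is a minimal sketch assuming an unmodified K3s install; `use_k3s_kubeconfig` is a hypothetical helper name, not part of K3s or Helm.

```shell
# Default location of the kubeconfig written by K3s.
K3S_KUBECONFIG=/etc/rancher/k3s/k3s.yaml

# Export KUBECONFIG if the file is readable; otherwise explain why not.
# $1 = optional alternative path (defaults to the K3s location above).
use_k3s_kubeconfig() {
  kubeconfig_path="${1:-$K3S_KUBECONFIG}"
  if [ -r "$kubeconfig_path" ]; then
    export KUBECONFIG="$kubeconfig_path"
  else
    echo "cannot read $kubeconfig_path; run as root or copy it to ~/.kube/config" >&2
    return 1
  fi
}

# Usage:
#   use_k3s_kubeconfig && helm list --all-namespaces
```

The file is normally readable only by root, which is why the function reports a failure rather than silently leaving `KUBECONFIG` unset.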

Hi Jeff and all,

I managed to fix this problem already. Now I can install Pi-hole but cannot access the Pi-hole page. My load balancer is at 10.10.26.21, but whenever I try to access it, it says page not found. Do you have any suggestions?

Thanks,

Dennis.

Did you solve this? I'm having exactly the same problem.

I am having the same issues also. Was this resolved?

Jeff, I have an issue applying the drupal.yml file. Any idea what is wrong here - error: unable to recognize "drupal.yml": no matches for kind "Ingress" in version "extensions/v1beta1"

pi-master:/drupal# kubectl create namespace drupal

namespace/drupal created

pi-master:/drupal# kubectl apply -f drupal.yml

configmap/drupal-config created

persistentvolumeclaim/drupal-files-pvc created

deployment.apps/drupal created

service/drupal created

error: unable to recognize "drupal.yml": no matches for kind "Ingress" in version "extensions/v1beta1"

pi-master:/drupal# kubectl apply -f mariadb.yml

persistentvolumeclaim/mariadb-pvc created

deployment.apps/mariadb created

service/mariadb created

pi-master:/drupal# nano drupal.yml

pi-master:/drupal# kubectl get pods -n drupal

NAME READY STATUS RESTARTS AGE

mariadb-58db88d5-cbnpb 1/1 Running 0 4m50s

drupal-54d49d68d4-rvtpd 1/1 Running 0 6m1s

pi-master:/drupal# kubectl get pods -n drupal -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mariadb-58db88d5-cbnpb 1/1 Running 0 5m38s 10.42.2.16 pi-agent0

drupal-54d49d68d4-rvtpd 1/1 Running 0 6m49s 10.42.0.35 pi-master

I'm experiencing the same error. I do have an instance of NGINX running though.
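That error is what you get when the cluster no longer serves `extensions/v1beta1`: the Ingress API moved to `networking.k8s.io/v1`, and the old version was removed in Kubernetes 1.22. The rest of the manifest applied fine, so only the Ingress part needs rewriting. Below is a hedged sketch that writes a replacement Ingress to its own file. The service name `drupal` and namespace `drupal` come from the output above; the hostname is a placeholder, port 80 is an assumption, and `write_drupal_ingress` is a made-up helper, so compare it against the Ingress section in your own drupal.yml before applying.

```shell
# Write a networking.k8s.io/v1 Ingress for the drupal service.
# $1 = output file, $2 = hostname to route (placeholder; use your own).
write_drupal_ingress() {
  out_file="$1"
  host="$2"
  cat > "$out_file" <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: drupal
  namespace: drupal
spec:
  rules:
    - host: ${host}
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: drupal
                port:
                  number: 80   # assumed service port
EOF
}

# Usage:
#   write_drupal_ingress drupal-ingress.yml drupal.example.test
#   kubectl apply -f drupal-ingress.yml
#   kubectl get ingress -n drupal
```

The main differences from the old format are the required `pathType` field and the nested `service.name` / `service.port` backend, which replace `serviceName` and `servicePort`.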

Love the video and documentation on Pi-hole in Kubernetes.

It appears Pi-hole does not have an SSH interface. I would love to add new DNS entries to the /etc/custom.list file.

Do you know of a way? My Kubernetes storage is located in Longhorn, so it's not possible to bind mount from Kubernetes.

HypriotOS/armv7: root@master in ~/cluster-monitoring

# make vendor

Installing jsonnet-bundler

can't load package: package github.com/jsonnet-bundler/jsonnet-bundler/cmd/jb@latest: cannot use path@version syntax in GOPATH mode

make: *** [Makefile:52: /root/go/bin/jb] Error 1

HypriotOS/armv7: root@master in ~/cluster-monitoring

#

I don't know enough about the Go language to resolve the deprecated syntax and update the Makefile. I've gone down quite a few rabbit holes without success...

Hit with the same error.

"Installing jsonnet-bundler

package github.com/jsonnet-bundler/jsonnet-bundler/cmd/jb@latest: can only use path@version syntax with 'go get'

make: *** [Makefile:52: /home/pi/go/bin/jb] Error 1"

Steps I took (they did not help resolve the error):

1. Added export GO111MODULE=on to .profile.

2. Ran go mod init cluster-monitoring.

3. Was supposed to execute go mod download repo@version (I have no idea what the repo@version is).

I ended here, still searching, but ....

Yep. Tried the same things….. :(

I removed the old Go version (1.11) and replaced it with new binaries of a recent version (1.18.7 in my case).

Then the make process executes properly. There have been big changes since 1.11.

The board doesn't accept my command... maybe because of the code.

Hope it helps
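To check whether an old Go is the culprit before reinstalling anything, a small version test can help. The second error message above ("can only use path@version syntax with 'go get'") suggests the Makefile runs `go install ...@latest`, and as far as I know that form was only added in Go 1.16; that threshold is my assumption, not something the repository states. `version_at_least` is a made-up helper name.

```shell
# Succeed when version $1 (e.g. 1.18.7) is at least major.minor $2 (e.g. 1.16).
version_at_least() {
  have_major=${1%%.*}
  have_rest=${1#*.}
  have_minor=${have_rest%%.*}
  want_major=${2%%.*}
  want_rest=${2#*.}
  want_minor=${want_rest%%.*}
  [ "$have_major" -gt "$want_major" ] ||
    { [ "$have_major" -eq "$want_major" ] && [ "$have_minor" -ge "$want_minor" ]; }
}

# Report on the installed toolchain, if there is one.
if command -v go >/dev/null 2>&1; then
  # "go version" prints something like: go version go1.18.7 linux/arm
  installed=$(go version | sed -E 's/^go version go([0-9.]+).*/\1/')
  if version_at_least "$installed" 1.16; then
    echo "Go $installed should handle 'go install pkg@version'"
  else
    echo "Go $installed is too old; upgrade before running 'make vendor'"
  fi
fi
```

With Go 1.11 the check fails, which matches the experience reported above, and with 1.18.7 it passes.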