Drupal.org has an excellent resource page to help you create a static archive of a Drupal site. The page references tools and techniques to take your dynamically generated Drupal site and turn it into a static HTML site with all the right resources, so you can put the site in mothballs.

From time to time, one of Midwestern Mac's hosted sites is no longer updated (e.g. LOLSaints.com), or the event for which the site was created has long since passed (e.g. the 2014 DrupalCamp STL site).

I thought I'd document my own workflow for converting typical Drupal 6 and 7 sites to static HTML that can be served from a simple Apache or Nginx web server without PHP, MySQL, or any other special software. I do a few extra things to preserve the original URL alias structure, keep CSS, JS and images in order, and make sure redirections still work properly.

1 - Disable forms and any non-static-friendly modules

The Drupal.org page above has some good guidelines, but basically, you need to make sure all the 'dynamic' aspects of the site are disabled: turn off all forms, turn off modules that use AJAX requests (like Fivestar voting), turn off search (whether it uses Solr or Drupal's built-in search), and make sure AJAX and exposed filters are disabled in all views on the site. A fully static site doesn't support this kind of functionality, and anything you leave in place will simply be broken on the static copy.

2 - Download a verbatim copy of the site with SiteSucker

CLI utilities like HTTrack and wget can be used to download a site, using a specific set of parameters to make sure the download is executed correctly, but since I only convert one or two sites per year, I like the easier interface provided by SiteSucker.

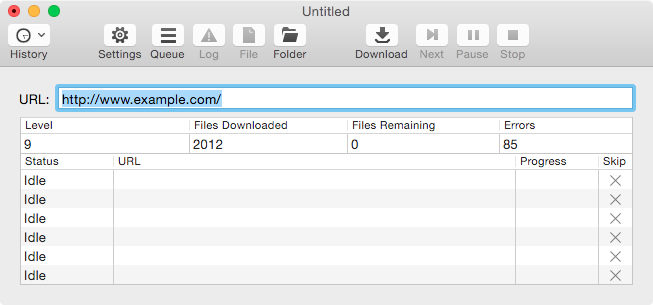

SiteSucker lets you set options for a download (you can save your custom presets if you like), and then it gives a good overview of the entire download process.

I change the following settings from the defaults to make the download go faster and result in a mostly-unmodified download of the site:

- General
  - Ignore Robot Exclusions (if you have a slower or shared server and hundreds or thousands of pages on the site, you might not want to check this box; ignoring the exclusions and the crawl delay can greatly increase the load on a slow or misconfigured web server while the Drupal site is being crawled)
  - Always Download HTML and CSS
  - File Modification: None
  - Path Constraint: Host
- Webpage
  - Include Supporting Files
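If you'd rather use wget from the command line, here's a rough translation of the settings above into a wget invocation, wrapped in a small Python helper so it can be printed or passed to `subprocess`. The flag mapping is an approximation, not an exact equivalent of SiteSucker's behavior; in particular, wget names the saved files for query-string URLs (like pager pages) differently, so the pager rewrite rules in step 3 would probably need adjusting for a wget-made copy. The name `build_wget_args` is just for illustration.

```python
import shlex


def build_wget_args(url: str, ignore_robots: bool = True) -> list[str]:
    """Build a wget command line that roughly mirrors the SiteSucker settings above."""
    args = [
        "wget",
        "--mirror",            # recursive download with infinite depth and timestamping
        "--page-requisites",   # ~ 'Include Supporting Files': CSS, JS, images
        "--adjust-extension",  # save HTML pages like /archive as archive.html
    ]
    # No --convert-links, to match 'File Modification: None'.
    # wget only follows links on the starting host unless --span-hosts is
    # passed, which approximates 'Path Constraint: Host'.
    if ignore_robots:
        args += ["-e", "robots=off"]  # ~ 'Ignore Robot Exclusions'
    else:
        args.append("--wait=1")  # pause between requests to go easy on the server
    args.append(url)
    return args


if __name__ == "__main__":
    # Print the command; pass the list to subprocess.run(..., check=True) to run it.
    print(shlex.join(build_wget_args("https://www.example.com/")))
```

Leaving out `--convert-links` keeps the links in the HTML untouched, which is what the Apache rewrites in step 3 rely on.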

After the download completes, I zip up the archive for the site, transfer it to my static Apache server, and set up the virtualhost for the site like any other virtualhost. To test things out, I point the domain for my site to the new server in my local /etc/hosts file, and visit the site.
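Before zipping up the archive, it can be worth a quick check that step 1 caught everything. Search boxes, comment forms, and exposed view filters all render as `<form>` elements, so a minimal sketch like this one (standard library only; `find_forms` and `scan_site` are hypothetical helper names) lists any saved page that still contains a form. It won't catch AJAX-only widgets that don't use a form.

```python
from html.parser import HTMLParser
from pathlib import Path


class FormFinder(HTMLParser):
    """Collects the action attribute of every <form> in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.actions: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        if tag == "form":
            self.actions.append(dict(attrs).get("action") or "")


def find_forms(html: str) -> list[str]:
    """Return the action of each form found in an HTML string."""
    finder = FormFinder()
    finder.feed(html)
    finder.close()
    return finder.actions


def scan_site(root: str) -> dict[str, list[str]]:
    """Map each saved .html file under root to the forms it still contains."""
    leftovers = {}
    for path in Path(root).rglob("*.html"):
        actions = find_forms(path.read_text(encoding="utf-8", errors="replace"))
        if actions:
            leftovers[str(path)] = actions
    return leftovers
```

Running `scan_site()` on the download folder and getting back an empty dict is a good sign that no forms slipped through.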

3 - Make Drupal paths work using Apache rewrites

Once you're finished getting all the files downloaded, there are some additional things you need to configure on the webserver level—in this case, Apache—to make sure that file paths and directories work properly on your now-static site.

A couple of neat tricks:

- You can preserve Drupal pager functionality without having to modify the actual links in HTML files by setting `DirectorySlash Off` (otherwise Apache will inject an extra `/` in the URL and cause weird side effects), then setting up a specialized rewrite using `mod_rewrite` rules.
- You can redirect links to `/node` (or whatever was configured as the 'front page' in Drupal) to `/` with another `mod_rewrite` rule.
- You can preserve links to pages that are now also directories in the static download using another `mod_rewrite` rule (e.g. if you have a page at `/archive` that should load `archive.html`, and there are also pages accessible at `/archive/xyz`, then you need a rule to make sure a request to `/archive` loads the HTML file and doesn't try loading a directory index!).
- Since the site is now static, and presumably won't be seeing much change, you can set far-future expires headers for all resources so browsers can cache them for a long period of time (see the `mod_expires` section in the example below).

Here's the base set of rules that I put into a .htaccess file in the document root of the static site on an Apache server for static sites created from Drupal sites:

```apache
<IfModule mod_dir.c>
  # Without this directive, directory access rewrites and pagers don't work
  # correctly. See 'Rewrite directory accesses' rule below.
  DirectorySlash Off
</IfModule>

<IfModule mod_rewrite.c>
  RewriteEngine On

  # Redirect non-www to www. This runs first so the redirect uses the
  # original request path rather than an already-rewritten '.html' path.
  RewriteCond %{HTTP_HOST} ^example\.com$ [NC]
  RewriteRule ^(.*)$ http://www.example.com/$1 [L,R=301]

  # Fix /node pagers (e.g. '/node?page=1').
  RewriteCond %{REQUEST_URI} ^/node$
  RewriteCond %{QUERY_STRING} ^page=(.+$)
  RewriteRule ^([^\.]+)$ index-page=%1.html [NC,L]

  # Fix other pagers (e.g. '/archive?page=1').
  RewriteCond %{REQUEST_URI} !^/node$
  RewriteCond %{QUERY_STRING} ^page=(.+$)
  RewriteRule ^([^\.]+)$ $1-page=%1.html [NC,L]

  # Redirect /node to home.
  RewriteCond %{QUERY_STRING} !^page=.+$
  RewriteRule ^node$ / [L,R=301]

  # Rewrite directory accesses to 'directory.html'.
  RewriteCond %{REQUEST_FILENAME} -d
  RewriteCond %{QUERY_STRING} !^page=.+$
  RewriteRule ^(.+[^/])/$ $1.html [NC,L]

  # If no extension included with the request URL, invisibly rewrite to .html.
  RewriteCond %{REQUEST_FILENAME} !-f
  RewriteRule ^([^\.]+)$ $1.html [NC,L]
</IfModule>

<IfModule mod_expires.c>
  ExpiresActive On
  <FilesMatch "\.(ico|pdf|flv|jpg|jpeg|png|gif|js|css|swf)$">
    ExpiresDefault "access plus 1 year"
  </FilesMatch>
</IfModule>
```
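To reason about which file a given request ends up serving, it can help to model the rewrite rules in a few lines of Python. This is a simplified sketch, not Apache itself: it walks the pager, `/node`, directory, and extensionless rules in order, ignores the non-www redirect and `mod_expires`, and takes hypothetical `files` and `dirs` sets standing in for what's on disk in the document root. It also assumes `/` is served by a `DirectoryIndex` of `index.html`.

```python
import re

# Patterns equivalent to the ones in the .htaccess rules (the [NC] flag
# makes no difference for these).
PAGE_QUERY = re.compile(r"^page=(.+)$")
NO_DOT = re.compile(r"^([^.]+)$")
DIR_WITH_SLASH = re.compile(r"^(.+[^/])/$")


def resolve(path: str, query: str, files: set[str], dirs: set[str]) -> tuple[str, str]:
    """Return ("redirect", url) or ("serve", file) for one request.

    `files` and `dirs` are hypothetical stand-ins for what exists in the
    document root, given as paths relative to it (e.g. "archive.html").
    """
    rel = path.lstrip("/")  # per-directory rules see the path without the leading slash
    page = PAGE_QUERY.match(query)

    # Pagers: /node?page=N -> index-page=N.html, /archive?page=N -> archive-page=N.html
    if page and NO_DOT.match(rel):
        if path == "/node":
            return ("serve", f"index-page={page.group(1)}.html")
        return ("serve", f"{rel}-page={page.group(1)}.html")

    # /node without a pager query: permanent redirect to the home page.
    if not page and rel == "node":
        return ("redirect", "/")

    # /archive/ where 'archive' is a real directory -> archive.html
    slash = DIR_WITH_SLASH.match(rel)
    if slash and not page and slash.group(1) in dirs:
        return ("serve", f"{slash.group(1)}.html")

    # Extensionless path that isn't an existing file -> path.html
    if rel not in files and NO_DOT.match(rel):
        return ("serve", f"{rel}.html")

    # Anything else is served as-is; "/" falls through to the
    # DirectoryIndex, assumed here to be index.html.
    return ("serve", rel or "index.html")
```

For example, `resolve("/archive", "page=2", {"archive.html"}, {"archive"})` returns `("serve", "archive-page=2.html")`, matching the file naming the pager rules expect.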

Alternative method using a localized copy of the site

Another, more time-consuming method is to download a localized copy of the site, where links are transformed to be relative and point directly to .html files instead of the normal Drupal paths (e.g. /archive.html instead of /archive). To do this, download the site using SiteSucker as outlined above, but select 'Localize' for the 'File Modification' option in the General settings.

There are some regex-based replacements that can clean up this localized copy, depending on how you want to use it. If you use Sublime Text, you can use these for project-wide find and replace, and use the 'Save All' and 'Close All Files' options after each find/replace operation.

I'm adding these regexes to this post in case you might find one or more of them useful—sometimes I have needed to use one or more of them, other times none:

Convert links to index.html to links to /:

- Find: `(<a href=")[\.\./]+?index\.html(")`
- Replace: `\1/\2`

Remove .html in internal links:

- Find: `(<a href="(?!http)[^"]+)\.html(")`
- Replace: `\1\2`

Fix one-off link problems (e.g. Feedburner links detected as internal links):

- Find: `(href=")[^"]*?(feeds2?\.feedburner)`
- Replace: `\1http://\2`

Fix other home page links that were missed earlier:

- Find: `href="index"`
- Replace: `href="/"`

Fix relative links like ../../page:

- Find: `((href|src)=")[\.\./]+(.+?")`
- Replace: `\1/\3`

Fix relative links in top-level files:

- Find: `((href|src)=")(?!/|http)(.+?")`
- Replace: `\1/\3`
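If you'd rather script these replacements than run them one at a time in an editor, here's a sketch of the same sequence using Python's `re` module, which uses the same `\1` backreference syntax in replacements. It's adapted rather than copied: the 'not an external link' checks are written as `(?!...)` lookaheads, the relative-link rule only strips `./` and `../` segments, and the last rule also skips `#fragment` and `mailto:` links. `REPLACEMENTS`, `clean_html`, and `clean_site` are hypothetical names; run it on a copy of the download, since `clean_site` rewrites files in place.

```python
import re
from pathlib import Path

# (pattern, replacement) pairs, applied in order.
REPLACEMENTS = [
    # index.html or ../index.html -> /
    (r'(<a href=")[./]*index\.html(")', r"\1/\2"),
    # Drop .html from internal links (external http(s) links are skipped).
    (r'(<a href="(?!http)[^"]+)\.html(")', r"\1\2"),
    # Feedburner links that were mistaken for internal links.
    (r'(href=")[^"]*?(feeds2?\.feedburner)', r"\1http://\2"),
    # Home page links missed above.
    (r'href="index"', r'href="/"'),
    # ../../page -> /page (only strips ./ and ../ segments).
    (r'((href|src)=")(?:\.\.?/)+([^"]+")', r"\1/\3"),
    # Remaining relative links in top-level files -> root-relative.
    # Also skips #fragment and mailto: links (an addition to the list above).
    (r'((href|src)=")(?!/|https?:|#|mailto:)([^"]+")', r"\1/\3"),
]


def clean_html(text: str) -> str:
    """Apply every find/replace pair, in order, to one HTML document."""
    for pattern, replacement in REPLACEMENTS:
        text = re.sub(pattern, replacement, text)
    return text


def clean_site(root: str) -> None:
    """Rewrite every .html file under root in place."""
    for path in Path(root).rglob("*.html"):
        original = path.read_text(encoding="utf-8")
        cleaned = clean_html(original)
        if cleaned != original:
            path.write_text(cleaned, encoding="utf-8")
```

Using `[^"]+` instead of `.+` keeps each match inside a single attribute, so two links on the same line are both handled in one pass.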

This secondary method can sometimes make for a static site that's easier to test locally or distribute offline, but I've only ever localized the site like this once or twice, since the other method is generally easier to get going and doesn't require a ton of regex-based manipulation.

Comments

Thank you for your article. I read a similar one by someone named Karen who mentioned this and a few other things. My site will shortly be obsolete as it is Drupal 6, and I am not sure what to do, being short of funds and skills. I looked into using Open Outreach, a hosted distro, or ProsePoint, a news-oriented distro similar to what tokyoprogressive is for. The Open Outreach people are helpful, but it would essentially mean rebuilding the site, with a learning curve. The ProsePoint people do not respond to my queries. With either option, I am not sure how easy it would be to import content. In any case, it is beyond my ability to rebuild the site in Drupal 7 or 8, as it was done for me by others a long time ago and I do not even have the knowledge to maintain it.

Which is where your article comes in. I understand more or less how to use SiteSucker, but I am lost where you mention turning off this and that and making Apache server changes.

What I would like is to pay someone to help me make tokyoprogressive.org a static site, then somehow upload it to a new host where I hope to create a simple blog (I am using Sandvox for Mac these days). Then I would essentially use the static site as an archive and blog away on a simple, maintenance-free platform. Do you have any recommendations for helping me find such a person? Also, I'm not sure what a fair price would be for such services. Thanks in advance for any advice you can offer.

Paul Arenson

Japan


Thank you. This was a total life saver. Great article. The only thing in SiteSucker I couldn't work out is why all the CSS and JS files had '-x' appended to the end of the filename (for example: styles-x.css, script-x.js and so on). I had to remove every instance of it manually. Apart from that, at least now I have a 90% working static site I can just chuck onto GitHub Pages. Certainly better than wasting 8 years of work... :)

I also noticed I had `?itok=XYZ` in image URLs when SiteSucker grabbed them on one site. The easiest way to fix that, at least, is to add the following to your site's settings.php file before pulling down the site: `$conf['image_suppress_itok_output'] = TRUE;`