I recently tried setting up an M.2 Coral TPU on a machine running Debian 12 'Bookworm', which ships with Python 3.11, making the installation of the pyCoral library very difficult (maybe impossible for now?).

Some of the devs responded 'just install an older Ubuntu or Debian release' in the GitHub issues, as that would give me a compatible Python version (3.9 or earlier)... but in this case I didn't want to do that.

So the next best option would be to set up the PCIe device following the official guide (so you can see it at /dev/apex_0), then pass it through to a Docker container—which would be easier to set up following Coral's install guide.
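Before moving on to Docker, it can help to confirm that the driver actually created the device node on the host. Here is a minimal Python sketch, assuming the nodes follow the `apex_N` naming mentioned above; `find_apex_devices` is a hypothetical helper of mine, not part of any Coral tooling:

```python
import glob
import os


def find_apex_devices(dev_dir="/dev"):
    """Return a sorted list of apex_* device nodes found under dev_dir."""
    return sorted(glob.glob(os.path.join(dev_dir, "apex_*")))


if __name__ == "__main__":
    devices = find_apex_devices()
    if devices:
        print("Found Coral PCIe device(s):", ", ".join(devices))
    else:
        print("No /dev/apex_* nodes found; check the driver install.")
```

If this prints nothing useful, there is no point continuing to the container steps until the host sees the device.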

Install Docker

I installed Docker using the instructions provided for an apt-based install on Debian:

sudo apt install ca-certificates curl gnupg

sudo install -m 0755 -d /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/debian/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

sudo chmod a+r /etc/apt/keyrings/docker.gpg

echo \
  "deb [arch="$(dpkg --print-architecture)" signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/debian \
  "$(. /etc/os-release && echo "$VERSION_CODENAME")" stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt update

sudo apt install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

Build a Docker image for Coral testing

Create a Dockerfile with the following contents:

FROM debian:10

WORKDIR /home

ENV HOME /home

RUN cd ~

RUN apt-get update

RUN apt-get install -y git nano python3-pip python-dev pkg-config wget usbutils curl

RUN echo "deb https://packages.cloud.google.com/apt coral-edgetpu-stable main" \
    | tee /etc/apt/sources.list.d/coral-edgetpu.list

RUN curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -

RUN apt-get update

RUN apt-get install -y edgetpu-examples

It's important to use Debian 10, as that version still has a system Python version old enough to work with the Coral Python libraries.
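To make the version constraint concrete, here is a small Python sketch that checks whether an interpreter is old enough. The `(3, 9)` ceiling is an assumption taken from the "3.9 or earlier" note earlier in this post, and `is_coral_compatible` is a hypothetical name:

```python
import sys

# Assumption: 3.9 is the newest Python the packaged Coral libraries support,
# based on the "3.9 or earlier" note in this post.
MAX_SUPPORTED = (3, 9)


def is_coral_compatible(version=None):
    """Return True if the (major, minor) version is at or below MAX_SUPPORTED."""
    if version is None:
        version = sys.version_info
    return tuple(version[:2]) <= MAX_SUPPORTED


if __name__ == "__main__":
    major, minor = sys.version_info[:2]
    status = "should work" if is_coral_compatible() else "is too new"
    print(f"Python {major}.{minor} {status} for the Coral Python libraries")
```

Running it inside the container and on the host should show the difference between the two Python versions.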

Build the Docker image, and tag it coral:

sudo docker build -t "coral" .

Run the Docker image and test the TPU

Make sure the device /dev/apex_0 is appearing on your system, then use the following docker run command to pass that device into the container:

sudo docker run -it --device /dev/apex_0:/dev/apex_0 coral /bin/bash

(If you're in the docker group, you can omit the sudo).
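If you would rather launch the container from a script, the same command can be assembled in Python. This is only a sketch: `docker_run_command` is a hypothetical helper, and its defaults simply mirror the device path and image tag used above.

```python
import shlex


def docker_run_command(device="/dev/apex_0", image="coral",
                       shell="/bin/bash", use_sudo=True):
    """Build the argument list for an interactive container with the TPU mapped in."""
    cmd = ["docker", "run", "-it", "--device", f"{device}:{device}", image, shell]
    if use_sudo:
        # Not needed if your user is in the docker group.
        cmd.insert(0, "sudo")
    return cmd


if __name__ == "__main__":
    # Print the command rather than running it, so it can be inspected first.
    print(shlex.join(docker_run_command()))
```

The list form can be handed to `subprocess.run` as-is if you want the script to start the container itself.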

This should drop you inside the running container, where you can run an Edge TPU example:

container-id# python3 /usr/share/edgetpu/examples/classify_image.py --model /usr/share/edgetpu/examples/models/mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite --label /usr/share/edgetpu/examples/models/inat_bird_labels.txt --image /usr/share/edgetpu/examples/images/bird.bmp

This should work... but in my case I was debugging some other flaky bits in the OS, so it didn't work on my machine.

Special thanks to this comment on GitHub for the suggestion for how to run Coral examples inside a Docker container.

Comments

I use the USB accelerator for a Frigate (camera) install; it has the same performance and wider compatibility. The only thing that needs to be mapped is `--device /dev/bus/usb:/dev/bus/usb`.

If anyone has issues here where the container still can't access the TPU, apparently there is a "rootless docker" problem that can be solved with a udev rule. I followed this: https://gist.github.com/ofstudio/ab8001a21c257d67255c9f43451132c0

(Replacing the IDs with the ones I found for the TPU)
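For anyone looking up their own IDs, here is a rough Python sketch that pulls the `vendor:product` pair out of one line of `lsusb` output and formats a udev rule from it. The rule shape and the permissive `0666` mode are my assumptions about a typical USB permission rule, not a copy of what the linked gist contains, and `udev_rule_from_lsusb` is a hypothetical helper:

```python
import re

# Matches the "ID vvvv:pppp" field that lsusb prints for each device.
LSUSB_ID = re.compile(r"\bID ([0-9a-fA-F]{4}):([0-9a-fA-F]{4})\b")


def udev_rule_from_lsusb(lsusb_line, mode="0666"):
    """Build a udev rule string for the USB device described by one lsusb line."""
    match = LSUSB_ID.search(lsusb_line)
    if match is None:
        raise ValueError(f"no USB ID found in: {lsusb_line!r}")
    vendor, product = (part.lower() for part in match.groups())
    # Assumption: a plain permission rule is enough; tighten MODE or add a
    # GROUP if world-writable access is too broad for your setup.
    return (
        f'SUBSYSTEM=="usb", ATTRS{{idVendor}}=="{vendor}", '
        f'ATTRS{{idProduct}}=="{product}", MODE="{mode}"'
    )
```

The resulting line would go in a file under `/etc/udev/rules.d/`, followed by a udev reload or a replug of the device.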

Did you manage to find anything for the PCIe TPU?

I guess you meant Debian 12 Bookworm, not docker :P

Oops! Yes, fixed.

> I recently tried setting up an M.2 Coral TPU on a machine running Docker 12 'Bookworm'

Did you mean Debian 12 ‘Bookworm’?

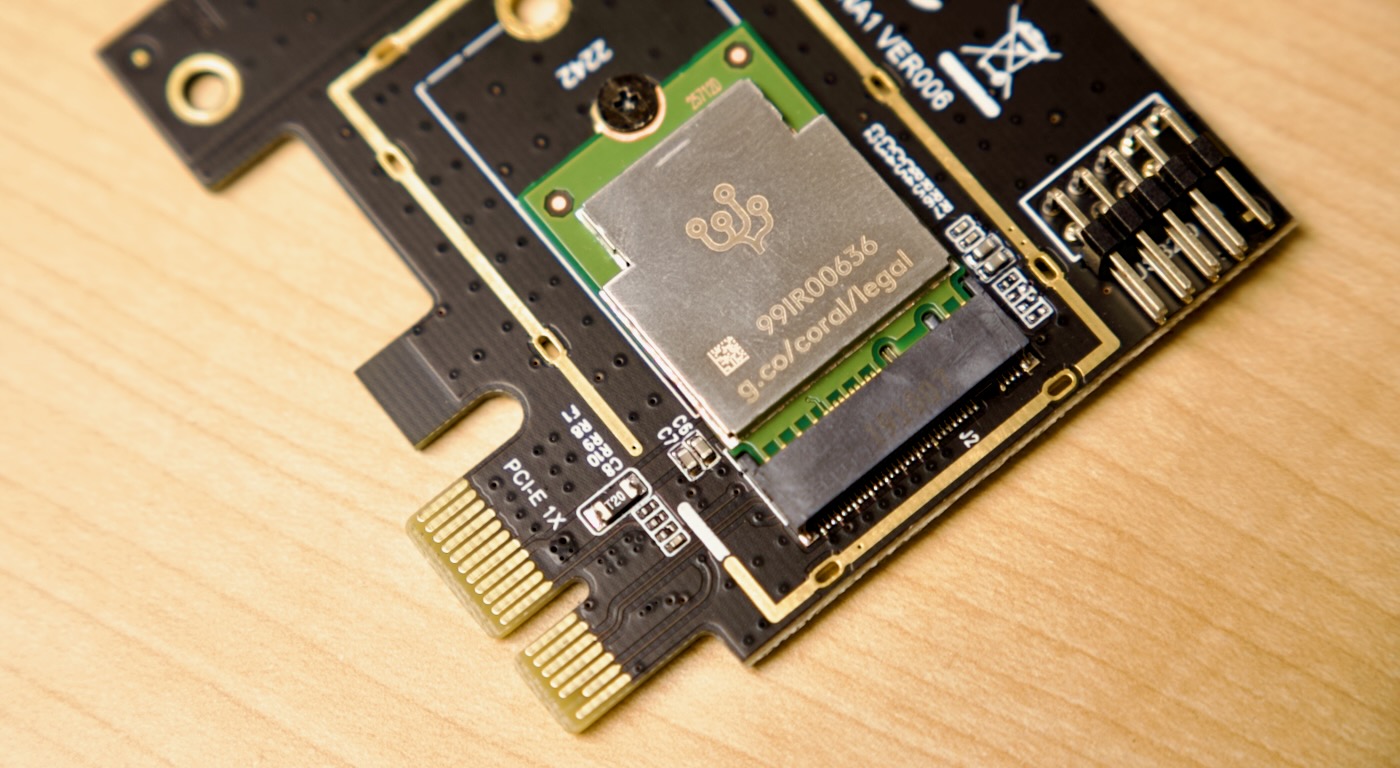

Is the Coral TPU in the picture the dual TPU Coral? What is the PCIe adapter it is connected to? Can you share a link to the PCIe adapter, please?

Radha.

That's a single TPU; I have a dual, but it requires a rather exotic M.2 slot that most motherboards don't include.

The adapter board I'm using is a PCIe to A+E adapter, similar to this one.

Thank you! I bought a TPU months ago but couldn't find a mini PCIe to PCIe x1 adapter that I trusted. It was way more difficult than I had expected.

I recently, similarly, passed through the USB one into an lxd container for the same reason. It looks like the Coral team has abandoned it in the last 6 months.

The dual accelerator requires either a full M.2 slot (not CNVi, or whatever the name is) or an adapter. On Amazon, about half of the adapters won't work at all, and the other half will pass through only a single chip. There is hope: search for the Coral TPU adapter on Makerfabs. I got one of those, and now both chips are visible and fully functional.

Couldn't you just use miniconda to create an environment for the coral and install Python 3.9 and libraries that way? It installs in the home directory and can easily be removed but you get your full native system to use.

@Jeff, if I understand correctly, you did not have any problems installing the Coral Edge TPU driver on Debian 12 Bookworm, as your only complaint is about Python incompatibility. I attempted to install the Coral driver (aka Gasket DKMS) a few months later than you did, in the first week of Jan 2024. I followed Coral's instructions to the letter but was unsuccessful. I even went as far as building a deb package from the source on GitHub, as was suggested in the GitHub discussion thread. Building the package did not raise any errors, but I still could not install it, as numerous headers were missing from the system and could not be installed. I gave up and re-installed Debian 10 in place of Debian 12. After that, all the pieces fell into place and everything worked. I haven't tried Debian 11, though, so I don't know how far back the Coral software incompatibility goes. But I am concerned that Coral may have abandoned it altogether to replace it with something new. What do you think?

I only got it working through Docker, not in Debian 12 directly. I agree it seems like Google is not as interested in keeping Coral moving forward these days :(

@Alex T, I was excited about the prospect of the M.2 Coral in my Frigate NVR, but after failing to get it to appear under my Arch system (had to rely on AUR) as well as LMDE, I was encouraged by your comment here. I installed Debian 10 and followed the Coral website, but still nothing in lspci etc. Just curious if you had to do anything beyond the Coral instruction steps. Thank you!

Any chance you've tried and got the usb accelerator working on fedora?

I'm having an issue with this. /dev/apex_0 is showing up in both the host and container, but in the container I get the following error when I try the demo code

RuntimeError: Error in device opening (/dev/apex_0)!

Similarly, I had previously attempted to run this directly on the host using a package that maps alternate python versions to specific directories, but I saw the same error

I have the same issue. Followed the instructions and got into the container. It gives an error when trying to access /dev/apex_0:

These directions no longer work

admin@rpi5b:~/frigate $ sudo docker run -it --device /dev/apex_0:/dev/apex_0 coral /bin/bash

root@1aacc1561983:~# python3 /usr/share/edgetpu/examples/classify_image.py --model /usr/share/edgetpu/examples/models/mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite --label /usr/share/edgetpu/examples/models/inat_bird_labels.txt --image /usr/share/edgetpu/examples/images/bird.bmp

Traceback (most recent call last):

File "/usr/share/edgetpu/examples/classify_image.py", line 54, in <module>

main()

File "/usr/share/edgetpu/examples/classify_image.py", line 44, in main

engine = ClassificationEngine(args.model)

File "/usr/lib/python3/dist-packages/edgetpu/classification/engine.py", line 48, in __init__

super().__init__(model_path)

File "/usr/lib/python3/dist-packages/edgetpu/basic/basic_engine.py", line 92, in __init__

self._engine = BasicEnginePythonWrapper.CreateFromFile(model_path)

RuntimeError: Error in device opening (/dev/apex_0)!

Can you check the `dmesg` logs on the Pi to see if there are any errors from `apex`? And also look inside `/dev` to make sure you see `apex_0` in there.

Hi Jeff,

I was able to check with pycoral in python3 inside a test container following your other instructions and it shows up just fine in there. Here is the output that you requested as well:

admin@rpi5b:~/frigate $ dmesg | grep apex

[ 6679.846989] apex 0000:01:00.0: Apex performance not throttled due to temperature

[ 6684.966972] apex 0000:01:00.0: Apex performance not throttled due to temperature

[ 6690.086952] apex 0000:01:00.0: Apex performance not throttled due to temperature

[ 6695.206933] apex 0000:01:00.0: Apex performance not throttled due to temperature

Apex_0 does show up on the host and inside the container:

admin@rpi5b:/dev $ ls /dev/apex_0

/dev/apex_0

Also here is output from Python3 running from within a test container:

>>> from pycoral.pybind._pywrap_coral import ListEdgeTpus as list_edge_tpus

>>> list_edge_tpus()

[{'type': 'pci', 'path': '/dev/apex_0'}]

[ 9330.706197] apex 0000:01:00.0: RAM did not enable within timeout (12000 ms)

[ 9330.706206] apex 0000:01:00.0: Error in device open cb: -110
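Since the driver also logs routine temperature messages, it can be handy to filter `dmesg` output down to the lines that look like failures. A minimal Python sketch follows; the match patterns are an assumption based only on the log excerpts in this thread, and `apex_errors` is a hypothetical helper:

```python
import re

# Assumption: these substrings mark the failure lines worth surfacing. They
# come from the log excerpts in this thread, not from the driver source.
ERROR_PATTERN = re.compile(r"error|did not enable|timeout", re.IGNORECASE)


def apex_errors(dmesg_text):
    """Return apex driver lines from dmesg output that look like failures."""
    return [
        line.strip()
        for line in dmesg_text.splitlines()
        if "apex" in line and ERROR_PATTERN.search(line)
    ]
```

Feeding it the text captured from `dmesg` should leave only lines like the two shown above and drop the "not throttled" noise.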

What kind of adapter are you using with the Coral? I did have that problem with any adapter using a straight FFC cable (usually the white variety) that doesn't have impedance-controlled traces.

I only had success using Pineberry Pi's FFC, which is a bit thicker and has impedance-controlled traces on it. The timing requirements for the Coral seem very picky, and lower quality cables (or a weak or not-fully-inserted connection) definitely causes that issue.

See my debugging in this GitHub issue.

I was using a white cable with blue ends that I ordered from Amazon. I tried the Pineberry cable that I have, and with that cable the device doesn't show up at all.

OMG that was it. I reseated the cable and it works and frigate was able to use it immediately. Wow. I have been going over this and over this forever. Thank you!

Yay! Now just be very careful with that cable—they are a bit fragile!

Wow - I had been struggling as well. I reseated the cable and ensured it was a good fit. And it worked! Amazing - Never forget about layer 1.

Thanks

Made the mistake of upgrading my perfectly working Pi 5. Someone mentioned they changed the device tree in the latest version? I redid the steps outlined, but it's not working.

Are there newer setup instructions for the device tree part?

Hi Jeff and All,

I had the same problem:

RuntimeError: Error in device opening (/dev/apex_0)!

I managed to fix it by following the instructions in Jeff's GitHub post:

1. Add pcie_aspm=off to /boot/cmdline.txt and reboot - this is not done by Dataslayer's script

2. Replace msi-parent <0x2d> under pcie@110000 by <0x66> in the device tree - note Jeff's instructions are to find <0x2f>, but probably due to a recent update this has been replaced by <0x2d>, so Dataslayer's script misses it.

Hope this helps,

Bigfin
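The first step above can be sketched in Python as a pure string edit on the contents of `/boot/cmdline.txt`, which is a single line of space-separated parameters. `ensure_kernel_param` is a hypothetical helper; writing the result back to the file and rebooting is left to you:

```python
def ensure_kernel_param(cmdline, param="pcie_aspm=off"):
    """Return the kernel command line with param set exactly once, at the end."""
    key = param.split("=", 1)[0]
    # Drop any existing setting for the same key so the new value wins.
    tokens = [
        token for token in cmdline.split()
        if token != key and not token.startswith(key + "=")
    ]
    tokens.append(param)
    return " ".join(tokens)
```

Because the function replaces any earlier value for the same key, running it twice gives the same result as running it once.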

Hey, y'all, I appreciated this post. However, I recently created a Raspberry Pi 5 - Google Coral Edge M.2 TPU installation guide that does not require Docker, runs natively, uses a newer Python version (3.9.16), and includes the PyCoral library.

Using the Coral TPU on Python 3.11 is now possible! The PyCoral library is still a bit buggy, but if you use the TFLite runtime directly it runs very smoothly. Thanks feranick! More info available here: https://github.com/google-coral/pycoral/issues/137
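For reference, using the TFLite runtime directly usually looks something like the sketch below. It assumes the `tflite_runtime` package and the Edge TPU runtime library (`libedgetpu.so.1` on Linux) are installed; `make_edgetpu_interpreter` is a hypothetical wrapper name, and I have not verified this against the builds discussed in the linked issue.

```python
def make_edgetpu_interpreter(model_path, delegate_lib="libedgetpu.so.1"):
    """Create a TFLite interpreter that runs the model on the Edge TPU."""
    # Imported lazily so this file can be loaded on machines without the runtime.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    # The delegate hands Edge TPU-compiled ops to the accelerator.
    delegate = load_delegate(delegate_lib)
    interpreter = Interpreter(
        model_path=model_path,
        experimental_delegates=[delegate],
    )
    interpreter.allocate_tensors()
    return interpreter
```

The model passed in needs to be one compiled for the Edge TPU, such as the `*_edgetpu.tflite` file used in the example earlier in the post.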