If you've ever needed to transfer a file from one computer to another over the Internet with minimal fuss, you know there are a few options. You could use scp or rsync if you have SSH access. You could use Firefox Send, Dropbox, iCloud Drive, or Google Drive: upload from one computer, and download on the other.

But what if you just want to zap a file from point A to point B? Or what if—like me—you want to see how fast you can get an individual file from one place to another over the public Internet?

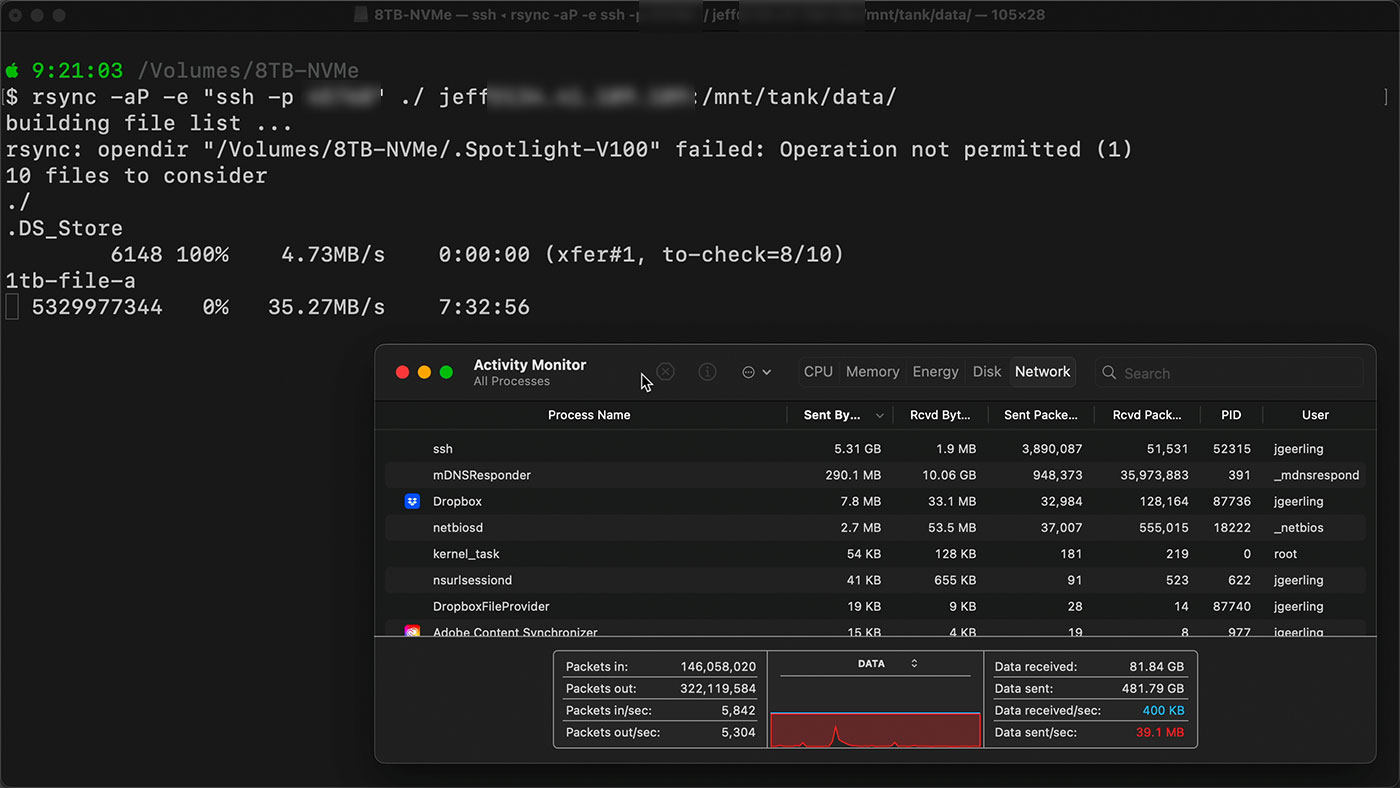

I first attempted this over SSH using scp and rsync, but for some reason (even though both computers could get 940 Mbps up and down to Speedtest or Cloudflare), transfers maxed out around 312 Mbps (about 39 MB/s). I even tunneled iperf3 through SSH and could only get a maximum of around 400 Mbps. I'm not sure if it was something at the ISP level (Bell Canada or AT&T throttling non-HTTP traffic?), but the CPU on both machines only hit 10-13% at most, so I don't think it was an inherent limitation of SSH encryption.
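Network speeds are quoted in megabits per second (Mbps) and file-transfer speeds in megabytes per second (MB/s), and mixing the two is easy. Since 1 byte is 8 bits, the conversion is a factor of 8. The functions below are a small sketch with hypothetical names, assuming decimal megabytes (1 MB = 1,000,000 bytes), as speed tests use:

```shell
# Hypothetical helpers for converting between network and file-transfer units.
# 1 byte = 8 bits; decimal prefixes (1 MB = 1,000,000 bytes) are assumed.

# Megabits per second -> megabytes per second, one decimal place.
mbps_to_mbytes() {
  awk -v rate="$1" 'BEGIN { printf "%.1f\n", rate / 8 }'
}

# Megabytes per second -> megabits per second, rounded to a whole number.
mbytes_to_mbps() {
  awk -v rate="$1" 'BEGIN { printf "%.0f\n", rate * 8 }'
}
```

For example, `mbps_to_mbytes 312` prints `39.0`, and `mbps_to_mbytes 940` prints `117.5`, roughly the best case for a single file transfer on a line that tests at 940 Mbps.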

Why should I care about getting speeds greater than 300 Mbps for single-file transfers? That question will be answered soon ;)

Short of running an open FTP server, or Samba over the Internet, my next favorite option is magic-wormhole.

If you've never used it, it truly is magic:

```shell
# On both computers:
[apt|dnf|snap|brew|choco] install magic-wormhole

# On the source computer:
wormhole send file-a

# On the recipient computer:
wormhole receive [paste phrase generated on source computer here]
```

It's worked great for years, and yes, it does rely on a public relay to send data from computer to computer, so you have to trust the relay (and the encryption). There are known vulnerabilities, so I wouldn't think about sending over state secrets... but for most other types of data, I'm not worried. I just want to send a file to another computer.

The Problem

But magic-wormhole was also only giving me speeds around 42 MB/s, only a slight improvement over SSH-based transfers. And that speed wasn't stable: it fluctuated, presumably as others were using the public relay.

Wormhole can do direct encrypted P2P transfers, but that requires fairly open networks between the machines (NAT and firewalls can make this very tricky to pull off), so it usually falls back to the public relay.

So I thought... I wonder if I could run my own relay, on a faster, dedicated server, and use that? Well, it turns out, you can! Enter magic-wormhole-transit-relay.

Setting up my own transit-relay server

The documentation was a tiny bit sparse for someone unfamiliar with Python's Twisted library, so I submitted a PR to remedy that.

Basically, you need a machine that can handle whatever link speeds you need (in my case, I was hoping for symmetric 1 Gbps up and down over a public IP). I chose a 4 GB Basic DigitalOcean Droplet running Ubuntu 22.04.

Once it was up, I ran a dist-upgrade, rebooted, then:

```shell
# Install Python 3 pip and Twisted
apt install python3-pip python3-twisted

# Install magic-wormhole-transit-relay
pip3 install magic-wormhole-transit-relay

# Run transit-relay in the background
twistd3 transitrelay

# Check on logs
cat twistd.log  # or: tail -f twistd.log

# (Once finished) kill transit-relay
kill "$(cat twistd.pid)"
```
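Before pointing wormhole at a new relay, it can save some head-scratching to confirm that the relay's port (4001, the port used with `--transit-helper` in the next command) is actually reachable from the client side, since a cloud firewall can silently block it. A minimal sketch with a hypothetical function name, assuming bash is installed (its `/dev/tcp` pseudo-device opens a TCP connection without needing netcat) and GNU `timeout` is available:

```shell
# Hypothetical helper: succeed (exit 0) if a TCP connection to HOST:PORT opens
# within 3 seconds. PORT defaults to 4001, the transit relay port used here.
relay_reachable() {
  local host=$1 port=${2:-4001}
  # bash's /dev/tcp/HOST/PORT redirection opens the socket; host and port are
  # passed as positional arguments so they are never re-parsed as shell code.
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

Run it from the computer that will do the sending, e.g. `relay_reachable 203.0.113.10 && echo "relay port open"`, where `203.0.113.10` is a documentation placeholder for the relay's public IP.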

With the relay running, instead of just `wormhole send file-a`, I specified my custom transit-relay server:

```shell
wormhole send --transit-helper=tcp:[server public ip here]:4001 file-a
```
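If you use your own relay regularly, a tiny shell function saves retyping the endpoint string. This is a sketch: `wsend`, `RELAY_HOST`, and `RELAY_PORT` are hypothetical names, and only the `--transit-helper=tcp:HOST:PORT` form is taken from the command above.

```shell
# Hypothetical wrapper: `wormhole send` through a self-hosted transit relay.
# RELAY_HOST must be set to the relay's public IP or hostname;
# RELAY_PORT defaults to 4001, the port used in the command above.
wsend() {
  wormhole send \
    --transit-helper="tcp:${RELAY_HOST:?set RELAY_HOST to your relay address}:${RELAY_PORT:-4001}" \
    "$@"
}
```

Usage: `RELAY_HOST=203.0.113.10 wsend file-a` (placeholder IP), then run `wormhole receive` on the other computer as usual.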

I copied the receive command, pasted it on the destination server, and got... about 50 MB/s. It would jump up to 60-70 MB/s for a minute, then slow back down to 50, and kept going back and forth. Better, but not amazingly stable, and still far from a full gigabit (125 MB/s in theory, about 110 MB/s in practice after protocol overhead). I really wanted to max out my gigabit connection!

Using iftop, I could see the Droplet's public interface sending and receiving at roughly the same rate, around 500-600 Mbps in each direction.

DigitalOcean says the maximum throughput on a standard Droplet is "up to 2 Gbps", but maybe they try to limit the public interface to 1 Gbps total? Not sure.
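The iftop numbers fit a simple back-of-the-envelope model: a relay has to receive every byte from the sender and send that same byte on to the receiver, so its interface carries the file twice. If the guess above is right and the public interface is capped at a combined 1 Gbps, each leg tops out around 500 Mbps. A sketch of that arithmetic, with a hypothetical function name:

```shell
# Hypothetical helper: best-case payload rate (MB/s) through a relay whose
# interface is capped at CAP megabits per second *combined* (both directions).
# The relay receives and re-sends every byte, so each direction gets half
# the cap; dividing by 8 converts megabits to megabytes.
relay_leg_mbytes() {
  awk -v cap="$1" 'BEGIN { printf "%.1f\n", cap / 2 / 8 }'
}
```

`relay_leg_mbytes 1000` prints `62.5`, in line with the 50-70 MB/s transfers above, while `relay_leg_mbytes 2000` prints `125.0`, which would be enough for a full gigabit.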

Going Faster

Next I spun up an 8 GB CPU-optimized 'premium' droplet, since this class is reported to have 10 Gbps connections, and I set up transit-relay on it.

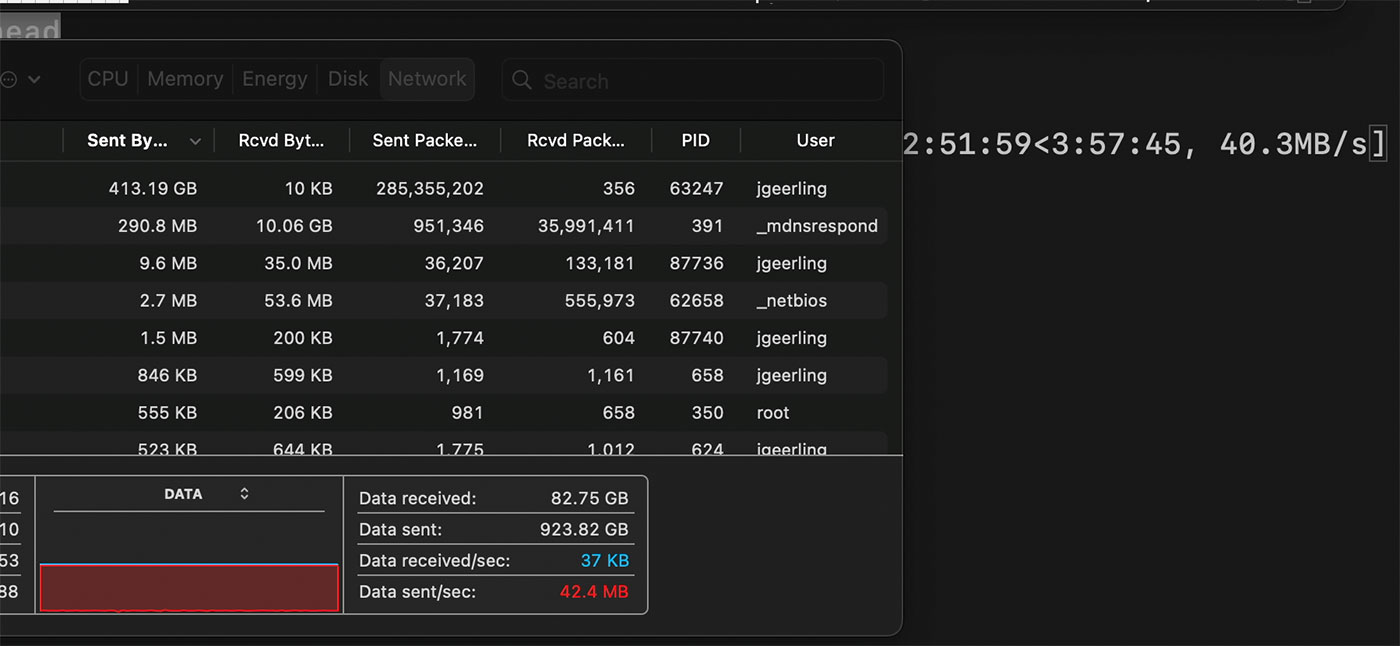

This time, my transfer stabilized at 75 MB/s (about 600 Mbps) and stayed there. Not an amazing speed improvement, but at least it was stable! Now I'm wondering if there's any way to directly transfer a file, encrypted, between two consumer Internet connections at a full gigabit, short of proxying it through HTTP!

Maybe it's just Bell/AT&T, or something in the router on one end or the other. I wish I didn't have to use the router AT&T provides with its Fiber service, because I don't have a lot of insight into what it's doing. My own router was not having any trouble, and could easily have pushed the full gigabit.

I'd love to hear what other people do for direct gigabit+ file transfer from one location to another (outside of data centers, where the connections and configuration are reliable and fast as a rule!). In the end, I have learned a good deal about magic-wormhole, and about testing consumer-to-consumer ISP connections—and there's always much more to do!

Edit: Many have recommended croc, which seems to be very similar to wormhole, but written in Go instead of Python. I have tried it a few times with files varying from 5-10 GB, and sometimes it seems to settle in around 54 MB/s:

```text
Sending (->1.2.3.4:49860)
chuck.MP4 100% |████████████████████| (7.7/7.7 GB, 54 MB/s)
```

Other times it can saturate my gigabit connection using compression:

```text
chuck.MP4 100% |████████████████████| (7.7/7.7 GB, 107 MB/s)
```

But if I use `--no-compress`, I end up getting very spiky behavior, at least over the public relay (between 15-30 MB/s as a long-term average).

You can skip the lengthy default hash algorithm and use imohash (`croc send --hash imohash`) if you're working on massive files like I am.

Definitely something to consider trying, though! Running your own relay seems like it may be a little easier, too (`croc relay`), but I was having some trouble with the sender quitting once I tried using my own relay.
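For a self-hosted croc relay, croc's README shows clients selecting it with the global `--relay` flag (for example `croc --relay "myrelay.example.com:9009" send [filename]`), and `croc relay` listens starting at port 9009 by default. A sketch of a wrapper combining that with the imohash option above; `csend` and `CROC_RELAY` are hypothetical names, and the receiving side presumably needs the same `--relay` value:

```shell
# Hypothetical wrapper: croc send via a self-hosted relay started with
# `croc relay`. CROC_RELAY must be HOST:PORT, e.g. 203.0.113.10:9009.
# --hash imohash hashes samples of the file instead of every byte,
# which helps with very large files.
csend() {
  croc --relay "${CROC_RELAY:?set CROC_RELAY to host:port of your relay}" \
    send --hash imohash "$@"
}
```

Usage: `CROC_RELAY=203.0.113.10:9009 csend chuck.MP4` (placeholder address).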

Comments

```shell
# On both computers:
[apt|dnf|snap|brew|choco] install magic-wormhole

# On the source computer:
wormhole send file-a

# On the recipient computer:
wormhole recieve [paste phrase generated on source computer here]
```

Is "receive" really spelled wrong in the software? :-)

Heh, oops! Only in my own writing! Fixed that.

I assume you didn’t have IPv6 available on the two ends. I guess that would have avoided going through this hassle.

Considering that 99.999999999999999998% of well-known tech YouTubers ignore the existence of the current version of the Internet Protocol with all their might and keep doing things like it's 1970, preferring the hassle of the impossible task of traversing multiple layers of NAT and whatever else may be in the data path, instead of doing the right thing and enjoying the end-to-end communication we all (should) know and love...

Also, home broadband links use shared infrastructure, and the guaranteed bandwidth only covers the link between the endpoint (the house) and the ISP's PoP. What happens beyond the PoP, and how good or bad the transit link is at that specific moment, we'll never know.

Unfortunately, using IPv6 is not always possible: it assumes all the equipment, software, and ISP transit along the way will handle it correctly... and it just doesn't happen that way unless you have more control over your stacks.

I do, on my end at least, but I don't have any control over the other end in this case, nor do I have control over the ISP networks. I'm guessing AT&T Fiber supports IPv6 though. Haven't spent time messing with it yet. I think it's insane that some ISPs still require NAT when you're trying to go IPv6-only :/

Did you try using Taildrop?

How about rsync directly rather than over ssh? If you don't need encryption it might be faster, though I usually can't be bothered setting it up and just wait on ssh.
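For reference, rsync without SSH means running rsync in daemon mode on the receiving machine and addressing it with an `rsync://` URL; that traffic (TCP port 873 by default) is unencrypted, so it only suits data you'd be comfortable sending in the clear. A minimal sketch that writes a one-module `rsyncd.conf`; the function name, the module name `transfer`, and the path are hypothetical placeholders:

```shell
# Hypothetical helper: print a minimal rsyncd.conf exposing one writable
# module. The directory must be writable by the account the daemon uses.
rsyncd_conf() {
  local module=$1 dir=$2
  cat <<EOF
[$module]
    path = $dir
    read only = no
EOF
}

# Usage sketch (receiver first, then sender); receiver-host is a placeholder:
#   rsyncd_conf transfer /srv/transfer > rsyncd.conf
#   rsync --daemon --config=rsyncd.conf
#   rsync -av --progress file-a rsync://receiver-host/transfer/
```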

Have you tried croc? It's a file transfer tool written in Golang. I haven't tested the speeds, but it always works perfectly for me.

croc looks extremely similar in architecture to wormhole, so I'll give it a try too!

About the SSH file transfer speed limitations: in my experience that's due to the SSH connection being single-threaded, which should be apparent if you check per-core CPU usage in htop (overall usage can look low while one core is pinned at 100%). Between an AMD Ryzen 7 5700G and an Intel i7-8565U, I also see transfer speeds of about 40-ish MB/s. Downloading files from Nextcloud over the same network yields much better speeds, 80-100+ MB/s, so it is not a hardware limitation.

You might be able to play around with SSH ciphers, but my testing did not yield any noticeable improvements. I might have just done something wrong there as well. Or maybe someone has figured out a way to send files while opening multiple SSH connections in parallel. Either way it's an interesting topic to look into.
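The parallel-connections idea can be approximated with standard tools: split the file into chunks, copy each chunk with its own `scp` process so the encryption work is spread across several ssh processes (and CPU cores), then join the pieces on the far end. This is a sketch, not something benchmarked here; it assumes GNU `split` (for `-n`), key-based SSH login, and scratch space for a second copy of the file, and `parallel_scp` is a hypothetical name:

```shell
# Hypothetical helper: copy FILE to DEST (an scp target such as
# user@host:/tmp/) as JOBS chunks over JOBS parallel scp sessions.
# Assumes GNU split (-n) and non-interactive (key-based) SSH auth.
parallel_scp() {
  local file=$1 dest=$2 jobs=${3:-4}
  local workdir name pids pid part rc
  workdir=$(mktemp -d) || return 1
  name=$(basename "$file")
  # Split into JOBS byte-range chunks named NAME.part.aa, NAME.part.ab, ...
  split -n "$jobs" "$file" "$workdir/$name.part." || { rm -rf "$workdir"; return 1; }
  pids=""
  for part in "$workdir/$name".part.*; do
    scp -q "$part" "$dest" &   # one ssh session per chunk
    pids="$pids $!"
  done
  rc=0
  for pid in $pids; do
    wait "$pid" || rc=1        # remember if any chunk failed
  done
  rm -rf "$workdir"
  return "$rc"
}

# On the receiving side, join the chunks in order (the aa, ab, ... suffixes
# sort correctly) and compare checksums with the original:
#   cat file-a.part.* > file-a && sha256sum file-a   # shasum -a 256 on macOS
```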

We have a different use case, where we need a mirrored directory at 12 locations in the world. Each site can add things, but in the end, they need to be “eventually consistent.”

We currently use Resilio, since it’s highly cross-platform and darn easy to set up.

The benefit isn't really the individual speeds; some of our locations are fairly austere, and no amount of protocol speed can make an uplink like that work.

Instead, Resilio (Syncthing is an equivalent open-source option) basically uses the Napster approach: the more peers have the files, the greater the throughput. When we set up our newest site, the other 11 peers force-fed it from zero to its full 1 TB at 238 MB/s.

Very interesting article. But I am https://nitpicking.com, so ...

Quote: "wormhole recieve [paste phrase generated on source computer here]"

Recieve?

Whoops! Fixed!

While I don't expect WebRTC (the web browser technology for P2P audio/video and data transfer) to reach gigabit speeds, the protocol is well optimized, since it's used so widely.

Maybe try veghfile and sharedrop

PS: Of course, tools like Syncthing (OSS, multi-platform) are the thing to use for syncing entire directory trees, even large ones. It works great with Pis, Android, and Windows/Linux (and, I hope, Mac too) to keep files synchronized (even bidirectionally).

My preferred alternative is croc: https://github.com/schollz/croc