tl;dr: Get the Vagrant profile for Drupal/PHP Continuous Integration Server from GitHub, and create a new VM (see the README on the GitHub project page). You now have a full-fledged Jenkins/Phing/SonarQube server for PHP/Drupal CI.

In this post, I'm going to explain how Jenkins, Phing and SonarQube can help you with your Drupal (or hany PHP-based project) deployments and code quality, and walk you through installing and configuring them to work with your codebase. Bear with me... it's a long post!

Code Deployment

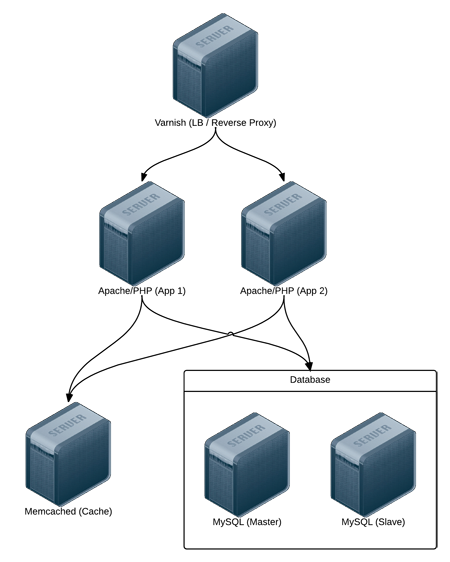

If you manage more than one environment (say, a development server, a testing/staging server, and a live production server), you've probably had to deal with the frustration of deploying changes to your code to these servers.

In the old days, people used FTP and manually copied files from environment to environment. Then FTP clients became smarter, and allowed somewhat-intelligent file synchronization. Then, when version control software became the norm, people would use CVS, SVN, or more recently Git, to push or check out code to different servers.

All the aforementioned deployment methods involved a lot of manual labor, usually involving an FTP client or an SSH session. Modern server management tools like Ansible can help when there are more complicated environments, but wouldn't everything be much simpler if there were an easy way to deploy code to specific environments, especially if these deployments could be automated to either run on a schedule or whenever someone commits something to a particular branch?

Enter Jenkins. Jenkins is your deployment assistant on steroids. Jenkins works with a wide variety of tools, programming languages and systems, and allows the automation (or radical simplification) of tasks surrounding code changes and deployments.

In my particular case, I use a dedicated Jenkins server to monitor a specific repository, and when there are commits to a development branch, Jenkins checks out that branch from Git, runs some PHP code analysis tools on the codebase using Phing, archives the code and other assets in a .tar.gz file, then deploys the code to a development server and runs some drush commands to complete the deployment.

Static Code Analysis / Code Review

If you're a solo developer, and you're the only one planning on ever touching the code you write, you can use whatever coding standards you want—spacing, variable naming, file structure, class layout, etc. don't really matter.

But if you ever plan on sharing your code with others (as a contributed theme or module), or if you need to work on a shared codebase, or if there's ever a possibility you will pass on your code to a new developer, it's a good idea to follow coding standards and write good code that doesn't contain too many WTFs/min.

![php[tek] 2016 logo](https://www.jeffgeerling.com/sites/default/files/images/phptek-2016-logo.png)