I've had a number of people ask about my backup strategy: how I ensure the 6 TB of video project files and a few TB of other files stay intact over time.

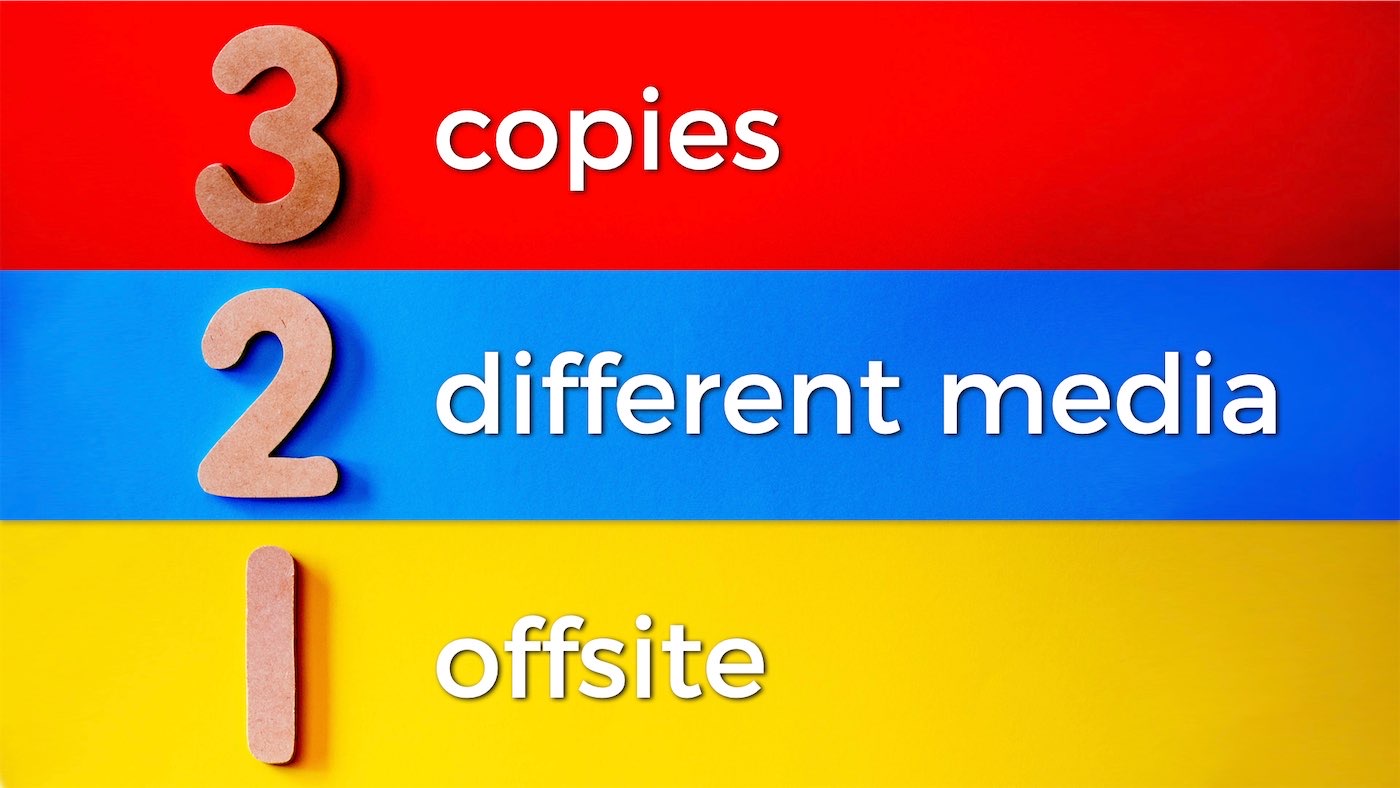

Over the past year, since I got more serious about my growing YouTube channel's success, I decided to document and automate as much of my backups as possible, following a 3-2-1 backup plan:

- 3 Copies of all my data

- 2 Copies on different storage media

- 1 Offsite copy

The culmination of that work is this GitHub repository: my-backup-plan.

The first thing I needed to do was take a data inventory—all the files important enough for me to worry about fell into six main categories:

For each category, I have at least three copies on different storage media (locally on my main Mac and NAS, or on my primary and secondary NAS in the case of video files), and one copy in the cloud (some data uses cloud storage; other data is rcloned to AWS Glacier using an S3 Glacier-backed bucket).

I manage rclone and automated gickup runs (for Git backups) on my 'backup Pi', which is managed via Ansible and has a few simple scripts and cron jobs to upload to AWS directly from my NAS.
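As a rough illustration of what one of those cron-driven uploads could look like, here is a minimal sketch that assembles an rclone command and a matching crontab line. The remote name (`glacier`), bucket path, source directory, and 03:00 Sunday schedule are all hypothetical placeholders, not values from my actual setup; `--s3-storage-class` is rclone's S3 option for picking a storage class such as `DEEP_ARCHIVE`.

```python
import shlex


def build_rclone_sync(source_dir, remote, bucket_path, storage_class="DEEP_ARCHIVE"):
    """Return the argv list for an rclone sync into an S3 bucket.

    All arguments are placeholders; nothing is executed here.
    """
    return [
        "rclone", "sync",
        source_dir,
        f"{remote}:{bucket_path}",
        "--s3-storage-class", storage_class,
    ]


def cron_line(schedule, argv):
    """Format a crontab entry that runs the given command on a schedule."""
    return f"{schedule} {shlex.join(argv)}"


# Hypothetical paths and names, for illustration only.
cmd = build_rclone_sync("/mnt/nas/video", "glacier", "example-bucket/video")
print(cron_line("0 3 * * 0", cmd))
```

Building the command as a list keeps quoting safe if a path ever contains spaces, and the same list could be handed to `subprocess.run(cmd, check=True)` from a script instead of cron.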

This allows me to have full disaster recovery quickly if just my main computer or primary NAS dies, and a little slower if my house burns down or someone nukes St. Louis (hopefully neither of those things happens...).

Many people have asked about Glacier pricing, and about how expensive retrieval is. Well, for storage, it costs about $4/month for more than 6 TB of data. Retrieval is more expensive, and there was one instance where I needed to spend about $5 to pull down 30 GB of data as quickly as possible... but that's not the main annoyance with Glacier.

The main problem is it took over 12 hours—since I'm using Deep Archive—to even start that data transfer, since the data had to be brought back from cold storage.

But it's a price I'm willing to pay, to save a ton on the monthly costs, and to have a dead-simple remote storage solution (rclone is seriously awesome, and simple).
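To put those figures in per-unit terms, here is a small back-of-the-envelope calculation. It uses only the approximate numbers quoted in this post (about $4/month for 6 TB, about $5 for a 30 GB expedited pull), so treat the results as rough estimates rather than AWS list prices; since I store a bit more than 6 TB, the storage rate is if anything an overestimate.

```python
# Approximate figures from this post, not official AWS pricing.
STORAGE_DOLLARS_PER_MONTH = 4.0  # "about $4/month"
STORED_TB = 6.0                  # "more than 6 TB", so the rate below is an upper bound
RETRIEVAL_DOLLARS = 5.0          # one fast retrieval
RETRIEVED_GB = 30.0


def per_unit(total_dollars, units):
    """Average cost per unit (TB or GB) for a given total."""
    return total_dollars / units


storage_per_tb_month = per_unit(STORAGE_DOLLARS_PER_MONTH, STORED_TB)
storage_per_year = STORAGE_DOLLARS_PER_MONTH * 12
retrieval_per_gb = per_unit(RETRIEVAL_DOLLARS, RETRIEVED_GB)

print(f"Storage:   ~${storage_per_tb_month:.2f} per TB per month")
print(f"Storage:   ~${storage_per_year:.0f} per year")
print(f"Retrieval: ~${retrieval_per_gb:.3f} per GB (fast retrieval)")
```

The takeaway is the asymmetry: keeping data parked is cheap per TB, while pulling it back quickly costs noticeably more per GB.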

Anyways, for even more detail about my backups, check out my latest video on YouTube:

And be sure to check out my GitHub repository, which goes into a LOT more detail: my-backup-plan.

Comments

"Retrieval is more expensive, and there was one instance where I needed to spend about $5 to pull down 30 TB of data as quickly as possible.."

I get that 5$ is more expensive than 4$ but I'm still going to ask if that was a typo.

Oops... that should be GB, not TB!

About 11-12 years ago, I thought I had a good backup plan. I was running Windows at the time, using Carbonite for cloud backup, and had a NAS for onsite backup. 3-2-1 plan. There was a hole in that plan. I managed to delete several folders with RAW photos that I didn't discover missing for several months. By that time, Carbonite had rolled them off the backups and they were nowhere to be found. I am now running more of a 6-5-4-3-2-1 plan with snapshots and archival storage. Local NAS (RAID 6 with 8x10TB drives) that has critical data backed up nightly to an external drive. NAS is backed up to B2 in the cloud. Computers back up to NAS, Backblaze, and shared files in DropBox that are backed up to everything else also. I know it is overkill, but I haven't lost anything since! :)

Hi, YouTube wouldn't let me post this as a comment, so I'll post it here instead.

"it's hard to automate backups for most consumer networking gear"

That being said, presenting Mikrotik.

The same Mikrotik that has a scheduler and can run scripts on a timed basis. Literally all you need is `/export file=today.rsc`, followed by an RPi scp-ing that file to the Asustor ;d

Same goes for routers: most of them (and I am talking about consumer grade, cheap-ass tplinks as well as dryteks) have this or some other form of "configuration backup", usually triggered by a single POST to the embedded µhttpd.

For a guy who literally automates daily coffee brewing with Ansible, writing a playbook which authenticates to the router and issues a config dump should take less than one afternoon. (mikrotik, openwrt and tplink gear user here :))
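The flow described in this comment (export the config on the router, then have a Pi copy it to the NAS) could be sketched roughly as follows. This is only an illustration under several assumptions: the router accepts SSH and scp for the given user, and the host names, user names, and destination directory are hypothetical. The export command and file name are taken as written in the comment.

```python
import subprocess


def build_config_backup(router, nas, export_name="today.rsc", nas_dir="/backups/router"):
    """Return the three commands a Pi would run: export, pull, push.

    `router` and `nas` are user@host strings; all values are placeholders.
    """
    export_cmd = ["ssh", router, f"/export file={export_name}"]
    pull_cmd = ["scp", f"{router}:{export_name}", export_name]
    push_cmd = ["scp", export_name, f"{nas}:{nas_dir}/"]
    return [export_cmd, pull_cmd, push_cmd]


def run_backup(commands):
    """Run each command in order, stopping at the first failure."""
    for argv in commands:
        subprocess.run(argv, check=True)


# Hypothetical hosts; nothing is executed until run_backup() is called.
commands = build_config_backup("admin@router.example", "backup@nas.example")
for argv in commands:
    print(" ".join(argv))
```

The same three steps map naturally onto an Ansible playbook or a cron job on the Pi.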

It seems like most of the Mikrotik automation is for RouterOS, but I'm running SwitchOS.

my response: have you tried following https://forum.mikrotik.com/viewtopic.php?p=846060

Hi Jeff

Have you given any thought to ransomware protection (e.g. on your NAS / mount points) in your backup strategy? I have a Synology and am looking at the differences between Synology Drive Client, Windows File History to a Windows share, or direct share mounting. I don't really want to have duplicate backups going to the same location. Would a proper network backup via named pipe be safer if there is no direct network write access to the share?

Thanks.

James....

That's one thing I didn't touch on here; having a weekly snapshot that's offline (or monthly/quarterly to save on money/bandwidth) would be a good protection too.

For my needs, Glacier is offline, but if something crypto'd my digital life and I didn't halt my backup job the next Sunday, it would propagate to my Glacier backup too (though nothing that was already backed up would be destroyed).